By: Rana el Kaliouby, Co-Founder and Chief Strategy and Science Officer

Over the past year, we’ve observed the conversation about emotion technology shift from the Why to the How.

Let me explain. People we met at events, conferences, and meetups have gone from asking “Why should I incorporate emotions in my digital experience?” to asking “How can I build emotion awareness in my digital experience?” There is now a much better understanding that making your digital experience emotion-aware increases user engagement, which in turn leads to better outcomes, such as a stickier app or more time spent on a website. Affectiva’s emotion-sensing tools allow creators of digital experiences to build stronger connections with their users.

This blog is the first in a series of How To blogs that help you “emotion-enable” your digital experience, be it a mobile app, a game, an application or even a movie!

First things first. What exactly does it mean to “emotion-enable” your digital experience? Broadly speaking, it means adding to any digital experience the capability to sense and adapt to the user’s emotions.

Emotion-enablement has two variations; think of them as two sides of the same coin:

- Insights and Analytics: In this case, your digital content or app collects data on the user’s emotional engagement by measuring, moment by moment, how the user responds. The data points are then aggregated (often once the session is complete), summarized and mined for insights into how engaging the experience was and how it could be personalized or improved.

- Real-time: In this case, the digital experience collects the user’s emotion data moment by moment and adapts to the user’s mood as it happens.
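To make the Insights and Analytics variation more concrete, here is a minimal C++ sketch of the post-session aggregation step. It assumes you have already copied one emotion score per processed frame (on the 0 to 100 scale) into a `std::vector<float>`; `SessionSummary` and `summarizeSession` are hypothetical names for illustration, not part of our SDK.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical summary of one completed session; not an Affdex SDK type.
struct SessionSummary {
    float mean = 0.0f;           // average score across all frames
    float peak = 0.0f;           // highest single-frame score
    float fractionAbove = 0.0f;  // share of frames at or above the threshold
};

// Aggregates per-frame scores on the 0 to 100 scale once a session ends.
// (Valence runs from -100 to 100 and would need a different starting peak.)
SessionSummary summarizeSession(const std::vector<float>& frameScores, float threshold)
{
    SessionSummary summary;
    if (frameScores.empty()) {
        return summary;  // no frames recorded: all fields stay at zero
    }
    float sum = 0.0f;
    std::size_t framesAbove = 0;
    for (float score : frameScores) {
        sum += score;
        summary.peak = std::max(summary.peak, score);
        if (score >= threshold) {
            ++framesAbove;
        }
    }
    const float count = static_cast<float>(frameScores.size());
    summary.mean = sum / count;
    summary.fractionAbove = static_cast<float>(framesAbove) / count;
    return summary;
}
```

For example, calling `summarizeSession(joyScores, 70.0f)` at the end of a viewing session would tell you how joyful viewers were on average, at their peak, and for what share of the session.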

Real-time emotion enablement is the focus of this blog. How do you emotion-enable your digital experience or app? Here are the four simple steps to make your app emotion-aware:

[1] DECIDE ON WHICH EMOTIONS MATTER

Our SDK measures 7 emotions and 15 highly nuanced facial expressions. We also offer additional dimensions of emotion including Engagement, Attention and Valence. Valence (not violence!) is an overall measure of how positive or negative an experience is.

Not all of these will be relevant to your specific app, and you may not want to enable all of them, because each additional measure affects processing speed. For example, if you are building a horror game that adapts to how frightened the player is, you may want to focus on two facial actions, eye closure and mouth open, along with the Fear emotion. Alternatively, if you are building an app that tracks whether people find video content humorous or gross, you may want to track the smile, smirk, nose wrinkler and brow furrow expressions, along with the Joy, Disgust and Contempt emotion measures.
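As an illustration of narrowing things down to a few measures, the sketch below keeps only the three readings the horror-game example cares about and combines them into a single yes/no signal. `FearReadings`, `playerIsScared` and the default threshold of 70 are hypothetical choices made for this example; you would copy the values in from whatever your SDK integration exposes.

```cpp
// Hypothetical per-frame readings for a horror game, copied from whatever face
// object your SDK integration provides. Only the three measures the game cares
// about are kept; each is on the 0 to 100 scale.
struct FearReadings {
    float fear = 0.0f;
    float eyeClosure = 0.0f;
    float mouthOpen = 0.0f;
};

// Illustrative rule: the player counts as scared if the Fear score is high on
// its own, or if eye closure and mouth open are both strong at the same time.
bool playerIsScared(const FearReadings& readings, float threshold = 70.0f)
{
    const bool fearful = readings.fear > threshold;
    const bool startled = readings.eyeClosure > threshold && readings.mouthOpen > threshold;
    return fearful || startled;
}
```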

With the exception of valence, all the facial action and emotion measures range from 0 to 100. The higher the number, the more confident the algorithm is that the expression is present. For example, in a video content app you might decide to count only smiles with a score above 70 and ignore any with a lower score. The right threshold depends on how sensitive you want your app to be. Valence is measured on a scale of -100 to 100: a negative value indicates a negative emotional state, and a positive value indicates a positive one.
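The thresholding logic in this step might look something like the following minimal sketch. `expressionPresent`, `Sentiment` and `classifyValence` are hypothetical names, the threshold is your own app-level choice rather than an SDK constant, and the optional neutral band around zero is an assumption added for illustration.

```cpp
// Returns true when a 0 to 100 expression or emotion score clears the app's
// threshold (for example, 70 for smiles in a video content app).
bool expressionPresent(float score, float threshold)
{
    return score > threshold;
}

enum class Sentiment { Negative, Neutral, Positive };

// Classifies a valence score on the -100 to 100 scale.
// neutralBand is a hypothetical dead zone: values within
// [-neutralBand, neutralBand] are treated as neutral.
Sentiment classifyValence(float valence, float neutralBand = 0.0f)
{
    if (valence > neutralBand) {
        return Sentiment::Positive;
    }
    if (valence < -neutralBand) {
        return Sentiment::Negative;
    }
    return Sentiment::Neutral;
}
```

Raising the threshold makes the app less sensitive (fewer false triggers, more missed expressions); lowering it does the opposite.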

[2] APP DYNAMICS: IF THIS, THEN THAT!

Next, spend some time thinking about the user experience, in particular how the actions, content or visuals within your app will change based on the user’s emotional state. Here are a few examples. A game in which you have to threaten a guard into opening the gates of a castle might have game dynamics like these:

```cpp
// Castle, State and AlertLevel are the game's own types, not part of the SDK.
void scareGuard(const affdex::Face& playerFace)
{
    /**
     * Check the Affdex SDK Face object's confidence score
     * for the player's currently observed level of anger.
     **/
    if (playerFace.Emotions.anger > 80) {
        Castle.Door.state = State::Open;
        Castle.Guard.alertLevel = AlertLevel::Low;
        Castle.Guard.scared = true;
    }
}
```

Reactive narration where the movie scenes are different depending on your emotional response:

```cpp
Scene selectSceneToPlay(const affdex::Face& playerFace)
{
    /**
     * Affdex SDK's Face object contains a confidence score
     * for 7 emotions for each processed video frame.
     **/
    if (playerFace.Emotions.joy > 80) {
        return SceneA();
    }
    else {
        return SceneB();
    }
}
```

An emoji keyboard where you select an emoji just by making a face:

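```cpp
Keyboard::Emoji selectEmoji(const affdex::Face& playerFace)
{
    /**
     * Affdex SDK's Face class contains the most likely emoji
     * that represents the user's observed facial expressions.
     **/
    if (playerFace.Emojis.dominantEmoji == affdex::Emoji::Smiley) {
        return Keyboard::Emoji::Smiley;
    }
    else if (playerFace.Emojis.dominantEmoji == affdex::Emoji::Rage) {
        return Keyboard::Emoji::Rage;
    }
    // Every path must return a value; Keyboard::Emoji::None is a
    // hypothetical "no selection" value in the app's own keyboard type.
    return Keyboard::Emoji::None;
}
```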

[3] SAMPLING RATE: HOW OFTEN DO YOU WANT TO SAMPLE?

All of our facial action and emotion measures are moment-by-moment measures; in other words, you get a reading for every frame. You therefore have to decide how often you want to sample for emotion data. Acceptable sampling rates range from 5 to 30 frames per second, and the right rate will largely depend on your application. For instance, if your app is already power-hungry, or if it must stay highly responsive to the user, you may want to sample at a lower frame rate, such as 10 frames per second, so that emotion processing leaves more headroom for the rest of the app. Likewise, if you need to support older devices with less horsepower, consider a slower rate (e.g. 5 FPS) on those devices.
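One way to hold emotion processing to a chosen rate when the camera delivers frames faster is to skip frames based on their capture timestamps. The sketch below assumes timestamps in milliseconds; `FrameSampler` is a hypothetical helper written for this example, not an SDK class.

```cpp
// Hypothetical helper that decides which camera frames to pass on for
// emotion processing so the effective rate stays at or below a target
// (for example anywhere from 5 to 30 FPS). Not an Affdex SDK class.
class FrameSampler
{
public:
    explicit FrameSampler(double targetFps) : intervalMs_(1000.0 / targetFps) {}

    // timestampMs: capture time of the incoming frame, in milliseconds.
    // Returns true if this frame should be processed.
    bool shouldProcess(double timestampMs)
    {
        if (!hasProcessed_ || timestampMs - lastProcessedMs_ >= intervalMs_) {
            lastProcessedMs_ = timestampMs;
            hasProcessed_ = true;
            return true;
        }
        return false;  // too soon since the last processed frame: skip it
    }

private:
    double intervalMs_;
    double lastProcessedMs_ = 0.0;
    bool hasProcessed_ = false;
};
```

On an older device you might construct `FrameSampler(5.0)`, and on a newer one `FrameSampler(10.0)` or higher, checking each camera frame with `shouldProcess` before handing it to the SDK. Because it measures elapsed time rather than counting frames, the sampler tolerates a varying camera frame rate, although timestamp jitter can make it process slightly fewer frames than the target.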

[4] INTEGRATION OF AFFDEX SDK

Once you have decided on the specific facial expressions and emotions you want your app to respond to, specified the app dynamics, and chosen a sampling rate, it’s time to integrate our SDK into your digital experience or app. Our SDK can take control of the camera, or, if your app already controls the camera, you can simply pass images or frames to our SDK.

NOTE: our SDK also works with photos and pre-recorded video, but that will be the subject of another How To blog.

The SDK was explicitly designed to be flexible and easy to use. On average, developers take between two and five days to fully integrate it. Most of the work lies in iterating on the app’s dynamics: defining its actions so that the overall user experience feels fluid and natural rather than choppy. Think of interacting with another person: sometimes you’re in sync and sometimes the whole exchange seems “off.” It all boils down to the nonverbal cues.
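One common way to keep an app’s reactions from feeling choppy is to smooth the per-frame score and use two thresholds (hysteresis): the app starts reacting only when the smoothed score rises above a high mark and stops only when it falls below a lower one, so a score hovering near a single cutoff does not make the app flicker between states. This is a general technique suggested here, not a feature of our SDK; `HysteresisTrigger`, the smoothing factor and the thresholds are illustrative.

```cpp
// Smooths a per-frame score with an exponential moving average and applies
// hysteresis so the app does not flip back and forth near one threshold.
// Hypothetical helper; alpha and both thresholds are illustrative choices.
class HysteresisTrigger
{
public:
    HysteresisTrigger(float alpha, float onThreshold, float offThreshold)
        : alpha_(alpha), on_(onThreshold), off_(offThreshold) {}

    // Feeds one frame's score (0 to 100) and returns whether the state is active.
    bool update(float score)
    {
        // Higher alpha reacts faster; lower alpha gives a smoother signal.
        smoothed_ = initialized_ ? alpha_ * score + (1.0f - alpha_) * smoothed_ : score;
        initialized_ = true;
        if (!active_ && smoothed_ >= on_) {
            active_ = true;   // switch on only above the high mark
        } else if (active_ && smoothed_ <= off_) {
            active_ = false;  // switch off only below the low mark
        }
        return active_;
    }

    float smoothed() const { return smoothed_; }

private:
    float alpha_;
    float on_;
    float off_;
    float smoothed_ = 0.0f;
    bool initialized_ = false;
    bool active_ = false;
};
```

You would feed it the same per-frame score your app dynamics read, for instance the joy value, and let its return value drive the change in content.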

We have provided a number of free sample applications to get you started. Check out developer.affectiva.com for more resources. Also feel free to email our team at: info@affectiva.com with any questions you have.

PRIVACY AND SECURITY

We realize that emotions are very personal and that most people do not want their faces recorded and their emotions analyzed without explicit consent. At Affectiva we feel very strongly about asking people to opt in to our technology. Our SDK License Agreement requires developers to explicitly ask users for permission to turn on the camera. It is also important to note that with our SDKs, all processing is done on the device: no videos or images are sent to our cloud.
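Honoring that opt-in in your own app can be as simple as never starting capture until the user says yes. In the sketch below, `ConsentGate` is hypothetical and the two callbacks stand in for your app’s own camera start and stop code; none of this is an SDK call.

```cpp
#include <functional>
#include <utility>

// Hypothetical consent gate: camera capture (and therefore emotion processing)
// starts only after the user explicitly opts in, and stops if they opt out.
class ConsentGate
{
public:
    ConsentGate(std::function<void()> startCamera, std::function<void()> stopCamera)
        : startCamera_(std::move(startCamera)), stopCamera_(std::move(stopCamera)) {}

    // Call with the user's answer to an explicit permission prompt.
    void setConsent(bool granted)
    {
        if (granted && !consented_) {
            consented_ = true;
            startCamera_();
        } else if (!granted && consented_) {
            consented_ = false;
            stopCamera_();
        }
    }

    bool cameraAllowed() const { return consented_; }

private:
    std::function<void()> startCamera_;
    std::function<void()> stopCamera_;
    bool consented_ = false;  // users start opted out
};
```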

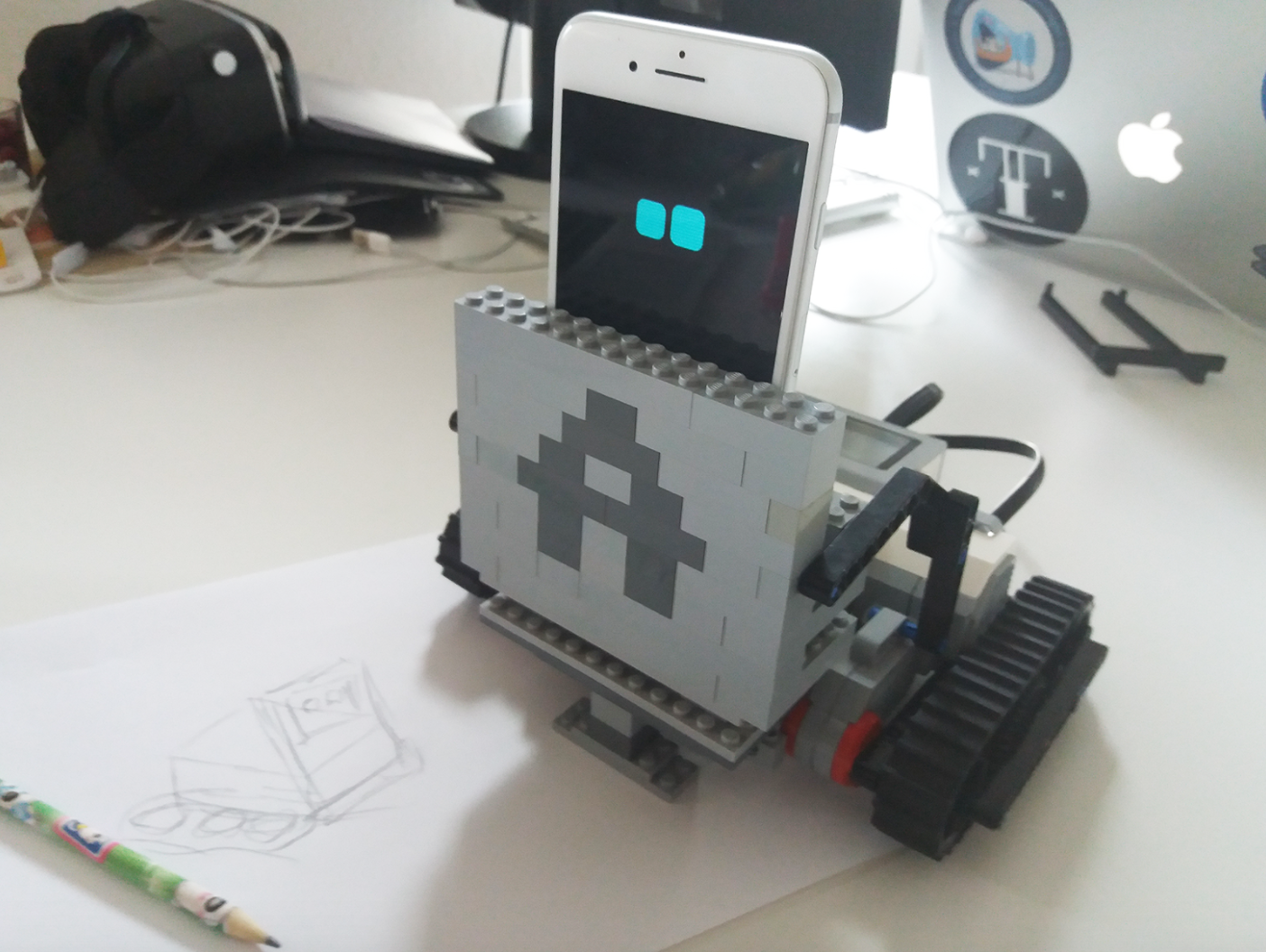

EXAMPLES OF AFFDEX EMOTION-ENABLED APPS

Here are a couple of examples of apps and digital experiences that have been emotion-enabled:

The Hershey Smile Sampler is a unique interactive retail experience, designed to surprise and delight the shopper at the point of purchase. This display initiates an emotion analysis session with the shopper: when the shopper smiles, the Smile Sampler dispenses a Hershey’s chocolate.

BB-8 is the new Star Wars droid, and Sphero has developed a BB-8 robot that is available in stores now. Using our SDK, we have augmented the behavior of the remote-controlled BB-8 robot with facial expressions and emotions.

There are so many apps, digital experiences and technology products that can be emotion-enabled to make for more authentic, unique and engaging solutions: what will YOU build?

*UPDATE:

Affectiva is focused on advancing its technology in key verticals: automotive, market research, social robotics and, through our partner iMotions, human behavioral research. Affectiva is no longer making its SDK and Emotion as a Service offerings directly available to new developers. Academic researchers should contact iMotions, which has integrated our technology into its platform.