Any disruptive–and successful–technology is bound to attract scrutiny, and that is only right. Affectiva has always welcomed scrutiny, as we have always been committed to building AI tools in the right way. So, some of the recent discussion in the media and market research industry about the utility of facial coding technologies is an extension of that. However, some of that commentary, particularly from some competitors, is both exaggerated and inaccurate. Arguments that facial analysis “doesn’t work” or is not scientifically supported simply do not apply to the Emotion AI that Affectiva creates, which has been in use worldwide for over a decade, and by more than 25% of the Fortune 500.

For instance, the suggestion that facial analysis does not offer meaningful insight into people’s reactions is, just on the face of it (forgive the pun), nonsense. Are they really saying that in social situations we glean no information about others’ feelings and state of mind from their faces? That flies in the face of both everyday experience and decades of evolutionary theory and research.

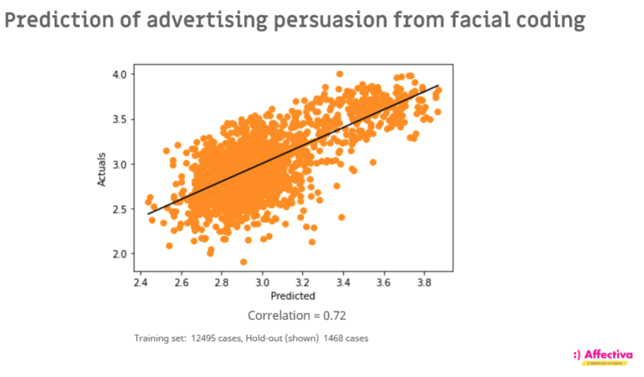

As for the idea that facial coding technology is inaccurate, we obviously cannot vouch for software created by others who have jumped on the Emotion AI movement that Affectiva started, but our accuracy is proven, has been published in peer-reviewed journals (see our science resources for more information), and has been constantly upgraded over the last decade, based on the uniquely diverse set of data we have processed (>6 billion facial frames and counting). Critically, we and our clients have also demonstrated clear relationships between facial expressions, as measured by our system, and key behavioral outcomes, such as sales, advertising virality, memory and motivation. Facial reactions (at least as measured by Affectiva) can tell us something immensely powerful about people’s reactions, and what that means for future behavior.

Much of the debate seems to us to stem from a misrepresentation of the scientific consensus about the relationship between facial expressions and emotional states. There is an ongoing scientific discussion about the specificity of facial signals – and the extent to which they vary by context and culture – but this is far from reaching a consensus, and a long way from suggesting that facial coding is “not scientifically supported”. The fact that Affectiva’s technology alone is in use in over 600 academic institutions around the world suggests that the scientific community is hardly abandoning facial analysis as a discipline. It’s also worth noting that the majority of Affectiva’s work focuses on identifying the 20+ individual building blocks of expressions (smiles, eyebrow raises, etc.), not the 6 “classic” emotions that authors such as Lisa Feldman Barrett take issue with. While it is true that a smile can mean different things in different contexts (e.g., happiness or embarrassment), or a frown might mean distress in one context and confusion in another, there remains extensive evidence, both historical (e.g., Paul Ekman’s work) and recent (e.g., Alan Cowen and Dacher Keltner’s 2021 paper in Nature), that expressions are cross-culturally consistent, and that generalizations can be drawn from them about emotional and cognitive states. Indeed, our own analysis of data drawn from over 13 million face videos from more than 90 countries clearly shows that the same patterns of facial response to content appear all over the world.

We would agree that understanding the context to which someone is reacting is important to help interpret facial signals, but crucially Affectiva’s technology is applied by analysts with full knowledge of that context. In our Media Analytics applications, analysts know the entertainment or advertising content that participants are reacting to, and use that knowledge to inform interpretation (Is this scene funny? Is the ad confusing?). They can also refer to our extensive cultural norms (90+ markets), and often to survey answers, to further help inform meaning. No single method gives the whole answer, and a multi-modal approach combining different metrics always yields the deepest insight.

Indeed, there seems to be a double standard at play here: some proponents of other methods, such as EEG or pictorial survey questions, seem to denigrate facial data because there are multiple interpretations available for the signal. That applies to all research methods–survey answers can be manipulated by phrasing and are massively influenced by culture; getting people to pick the face that represents how they feel on a survey is the same as asking a direct, “System 2” question; and as anyone who has tried to interpret an EEG or GSR signal will tell you, there are many reasons why spikes in the lines appear, which often have nothing to do with emotional response. At least most people know what a smile means–you must be a neuroscientist to explain a brainwave signal, and most research users are not. That is, some facial expressions are “explainable,” while some of these other signals are not. And are we really going to wind back the research industry clock to the 1990s and recruit everyone to venues, just so we can stick electrodes on them?

As ever, we, and research users, want things to be simple–but the truth is all biometric and survey methods have their strengths and weaknesses. There are things that the face cannot tell us–but there is a very wide array of signals (we measure 30+ discrete metrics) that we can glean, and only facial coding can give in-the-moment reactions at the kind of global scale and speed that research users need. Affectiva has researched over 70,000 ads, trailers, films and episodes, in over 90 countries, analyzed in near-real time, integrated with surveys and other audience signals – a flexibility that many other approaches simply cannot match.

A final word on very legitimate concerns that many people have: ethics and privacy. Some organizations lump facial coding technology in with Facial Identification technology and worry about its use for surveillance or profiling. For clarity, Affectiva facial technology cannot and does not identify individuals – all face videos are collected and processed with explicit consent and anonymized. We do not license our technology for use in surveillance or for security purposes. There are real and legitimate concerns about privacy for computer vision technologies, but we have always taken great care to avoid such issues and are always willing to engage with organizations with such concerns.

This is a long post, so thank you if you made it this far–the issues raised recently are important, but the commentary often misrepresents both the science and the state of the art. Affectiva’s technology has been used by thousands of businesses and academics very successfully over the last decade, and they continue to find it insightful, valid, and uniquely scalable. A multi-disciplinary approach will always yield the best answers, but our Emotion AI remains the most scalable and cost-effective way to generate in-the-moment understanding of people’s intuitive responses to experiences, ads, and content.