Every day, distracted driving causes more than 1,000 injuries and nine fatalities in the U.S. alone. As autonomous driving capabilities are deployed, OEMs and Tier 1s face even more safety concerns and considerations, forcing the industry to rethink transportation at its core.

AI is driving the future of automotive, playing a critical role in self-driving systems and turning cars into software platforms on wheels. Using cameras, sensors, complex deep learning algorithms, and massive amounts of data, AI enables autonomous vehicles to determine their position on the road and recognize other vehicles and pedestrians around them. While AI in automotive has historically focused on what’s going on outside the vehicle, car manufacturers are beginning to turn cameras and sensors inward, using AI to gain insight into what’s going on with the people inside the vehicle.

To improve road safety, OEMs and Tier 1s must have a deep understanding of driver emotions, cognitive states, and reactions to the vehicle systems and driving experience. Driver monitoring systems can accomplish this if they are reliable, accessible and available. But how?

Reliable: Real Time, On-Device

Driving a car is the epitome of real time: you simply can’t spare the seconds it takes for data to be collected, sent to the cloud, and analyzed before deciding what the car will do in a given situation. This is especially true if the driver is impaired in some way, when those seconds can leave too much room for tragedy.

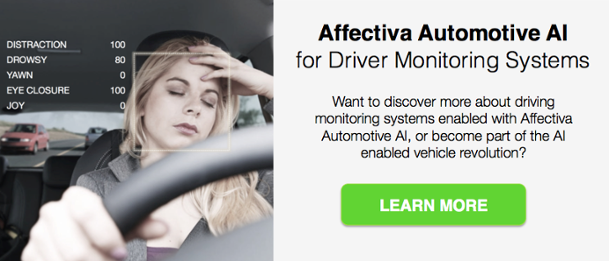

Today, Affectiva Automotive AI helps augment driver monitoring systems with powerful drowsiness and distraction detection. Our deep learning models provide accurate, real-time estimates of emotions and cognitive states on mobile devices and embedded systems. We’re focused on building highly efficient models that achieve high accuracy with a minimal footprint. Our approach combines joint training across a variety of tasks, using layers shared between models; iterative benchmarking and profiling of on-device performance; and model compression, that is, training compact models from larger ones. Read more on the science behind our technology here.
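Training a compact model from a larger one is commonly done with knowledge distillation: the small “student” model learns to match the softened output probabilities of the large “teacher” model as well as the true labels. The following is a minimal, hypothetical sketch of a distillation loss in plain Python, not Affectiva’s implementation. The function names, the temperature of 4.0, the weighting `alpha`, and the three driver-state classes in the usage example are all assumptions chosen for illustration.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: List[float], q: List[float]) -> float:
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def cross_entropy(probs: List[float], label: int) -> float:
    """Negative log-probability assigned to the true class."""
    return -math.log(probs[label])


def distillation_loss(
    student_logits: List[float],
    teacher_logits: List[float],
    label: int,
    temperature: float = 4.0,  # assumed value, for illustration only
    alpha: float = 0.5,        # assumed weight between soft and hard targets
) -> float:
    """Blend of a soft-target loss (match the teacher) and a hard-target loss (match the label).

    The soft term is scaled by temperature squared, a common convention that keeps
    its gradient magnitude comparable as the temperature changes.
    """
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    soft_loss = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    hard_loss = cross_entropy(softmax(student_logits), label)
    return alpha * soft_loss + (1 - alpha) * hard_loss


if __name__ == "__main__":
    # Hypothetical classes: 0 = "alert", 1 = "drowsy", 2 = "distracted"
    teacher = [0.2, 3.0, -1.0]  # large model's logits for one frame
    student = [0.5, 1.5, 0.0]   # compact model's logits for the same frame
    print(distillation_loss(student, teacher, label=1))
```

Matching the teacher’s full probability distribution, rather than only the hard label, passes along how the larger model ranks the less likely classes, which is one reason a much smaller student can retain much of the teacher’s accuracy.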

Accessible: Data Provided Informs Potential Vehicle Actions

Driver monitoring systems on the market today are still in their infancy, and there’s a significant gap when it comes to recognizing complex and nuanced cognitive and emotional states. Using AI and deep learning, Affectiva Automotive AI takes driver state monitoring to the next level, analyzing both face and voice for levels of driver impairment caused by physical distraction, mental distraction from cognitive load or anger, drowsiness, and more. With this “people data”, the car’s infotainment system or ADAS can be designed to take appropriate action. In semi-autonomous vehicles, awareness of driver state also builds trust between people and machines, enabling an eyes-off-road experience and helping solve the “handoff” challenge.

Available: Affectiva Automotive AI

Affectiva Automotive AI is the first AI-based in-cabin solution that measures, in real time and from face and voice, complex and nuanced emotional and cognitive states of a vehicle’s occupants. Built on industry-leading AI technology, the solution is designed for automotive environments. Delivered as a software development kit (SDK), it runs in real time on embedded and desktop platforms. It supports RGB and Near-IR camera feeds and various camera positions. Read more about data, metrics, and integration.

The Bottom Line

Using cameras and microphones, Affectiva Automotive AI unobtrusively measures, in real time, complex and nuanced emotional and cognitive states from face and voice. This next-generation in-cabin software uses deep learning and real-world driver and passenger data to enable OEMs and Tier 1s to measure driver impairment as well as occupant mood and reactions.

Want to discover more about driver monitoring systems enabled by Affectiva Automotive AI, or become part of the AI-enabled vehicle revolution?