At the Emotion AI Summit, keynote speaker Danny Lange delivered a talk titled “The Emotion AI Loop in Next-Generation Digital Experiences.” Lange, who is VP of AI and Machine Learning at Unity, discussed how deep reinforcement learning is ready to disrupt digital experiences.

Traditionally, user experience development is a complex and labor-intensive effort. Take, for instance, a game: its environments, storylines, and character behaviors are carefully crafted, requiring graphic artists, storytellers, and software engineers to work in unison.

In this process, reinforcement learning is becoming an increasingly powerful tool because it can dynamically adapt and optimize game behavior based on a feedback loop. Typically, this loop is driven by quantitative measures such as minutes of user activity and in-app purchases. But what if we didn’t stop there? Imagine turning this loop into a flywheel of increasing emotional engagement, excitement, and joy: something we could call the Emotion AI Loop. Let’s take a look at some of the key points Danny Lange covered in his Emotion AI Summit talk.

How you play games says a lot about you

Much of Unity’s work is about connecting players with games they will enjoy. Unlike on social media platforms such as Facebook and Instagram, people don’t usually log in when they play a game; the game only sees their interactions. However, there are more advanced techniques for learning about players from their behavior, and how someone interacts with a game says a lot about them. On the other side, developers have created millions of games, so how does each one get matched with the right players? Many games go undiscovered by players who would really love them, and that’s where machine learning (ML) comes in.

How do we make computers learn instead of coding them?

Lange noted that during his time at Amazon, the most popular algorithm there was the multi-armed bandit. Its name comes from slot machines (“one-armed bandits”) in Vegas: you want to play the machine most likely to give you a good return. But, as in Vegas, finding out costs you, because you don’t know in advance which machine will pay out the most, so you have to spend money trying them.

Amazon uses the same approach when it tries to sell you things: it knows some items will sell more easily than others. Amazon draws on what it already knows about your past purchases to figure out how to get you to purchase again, that is, to collect the “reward.”
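To make the explore/exploit trade-off concrete, here is a minimal epsilon-greedy bandit sketch. It is not Amazon’s system: the payout probabilities are invented, and epsilon-greedy is just one simple bandit strategy (UCB and Thompson sampling are common alternatives). With probability `epsilon` the agent explores a random machine; otherwise it exploits the machine with the best estimated payout so far.

```python
import random


def epsilon_greedy_bandit(payout_probs, steps=10_000, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy player on a row of slot machines.

    payout_probs: hypothetical win probability of each arm, unknown to the player.
    Returns (estimated value per arm, pull count per arm, total reward).
    """
    rng = random.Random(seed)
    n_arms = len(payout_probs)
    counts = [0] * n_arms
    values = [0.0] * n_arms  # running mean reward observed for each arm
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random machine
        else:
            arm = max(range(n_arms), key=lambda a: values[a])  # exploit best estimate
        reward = 1.0 if rng.random() < payout_probs[arm] else 0.0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean update
        total += reward
    return values, counts, total
```

Called with hypothetical probabilities such as `[0.2, 0.5, 0.7]`, the player spends a small share of pulls exploring and the rest on whichever machine currently looks best, which after enough pulls is the 0.7 machine.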

The Multi-Armed Bandit Algorithm

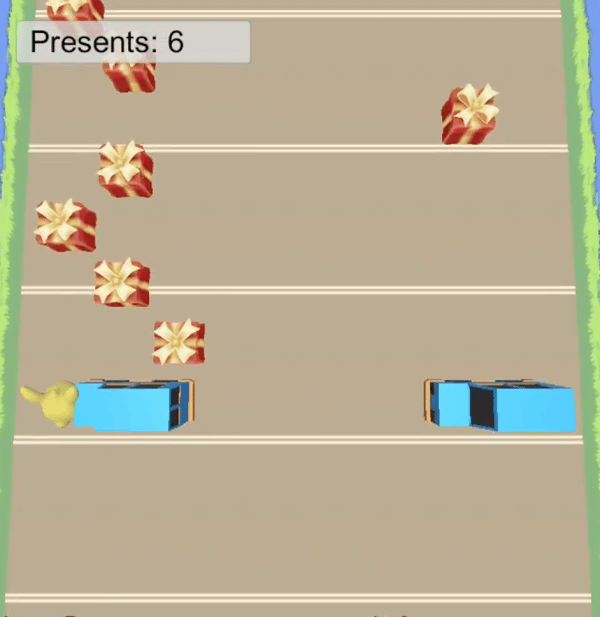

Lange illustrated the multi-armed bandit idea with an example from Unity: a game in which a chicken must cross a road safely, posed as a problem for a deep reinforcement learning algorithm. The team told the computer that it had four possible actions, that it would receive a penalty every time the chicken was killed by a car, and that it would receive a reward every time it picked up a gift package. The computer knows nothing about the chicken, or even what a chicken is; it has to learn that, too.

This is similar to Amazon’s position before it knows who you are. The algorithm has only two pieces of information: picking up a package is good, and getting killed by a car is bad. Within an hour, the computer has figured out how to play well, though it still gets killed from time to time, all learned from scratch; after six hours, it plays at a superhuman level.

Lange calls this the AI Loop, because no physicists, software engineers, or psychologists are involved in the process. Little human intellect goes into figuring out how to get the chicken across the road; it’s just trial and error, exploration and exploitation. The computer is given a set of actions and a rewards function, and it learns by exploring first and then gradually shifting to exploiting what it has learned.
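The loop can be illustrated with tabular Q-learning on a toy grid. This is a hypothetical, heavily simplified stand-in, not Unity’s environment: the demo in the talk used deep reinforcement learning, whereas this sketch stores values in a lookup table, and the grid layout, car positions, learning rate, and decay schedule are all invented. The agent gets only four actions and two signals (+1 for the gift, -1 for a car), and its `epsilon` decays so it explores heavily at first and then shifts to exploiting.

```python
import random

# Hypothetical toy "chicken crossing" grid, NOT Unity's actual environment.
# Row 0 is the near sidewalk, row 3 the far side; rows increase "up".
ROWS, COLS = 4, 3
START = (0, 1)
GIFT = (3, 1)             # reaching it earns +1 and ends the episode
CARS = {(1, 1), (2, 0)}   # stepping here earns -1 and ends the episode
ACTIONS = [(1, 0), (-1, 0), (0, -1), (0, 1)]  # the four options: up, down, left, right


def step(state, action):
    """Move one cell (clamped to the grid) and return (next_state, reward, done)."""
    r = min(max(state[0] + action[0], 0), ROWS - 1)
    c = min(max(state[1] + action[1], 0), COLS - 1)
    nxt = (r, c)
    if nxt == GIFT:
        return nxt, 1.0, True
    if nxt in CARS:
        return nxt, -1.0, True
    return nxt, 0.0, False


def best_action(q, state):
    """Greedy choice: the action with the highest estimated value (unseen = 0)."""
    return max(range(len(ACTIONS)), key=lambda i: q.get((state, i), 0.0))


def train(episodes=2000, alpha=0.5, gamma=0.9, seed=0):
    """Tabular Q-learning with an epsilon that decays from 1.0 to 0.05."""
    rng = random.Random(seed)
    q = {}  # (state, action index) -> estimated long-term value
    for ep in range(episodes):
        # Explore heavily at first, then shift toward exploiting what was learned.
        epsilon = max(0.05, 1.0 - ep / (0.5 * episodes))
        state = START
        for _ in range(50):  # cap episode length
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = best_action(q, state)
            nxt, reward, done = step(state, ACTIONS[a])
            target = reward if done else reward + gamma * q.get((nxt, best_action(q, nxt)), 0.0)
            old = q.get((state, a), 0.0)
            q[(state, a)] = old + alpha * (target - old)
            state = nxt
            if done:
                break
    return q


def greedy_path(q, max_steps=20):
    """Follow the learned policy from START without exploring."""
    state, path = START, [START]
    for _ in range(max_steps):
        state, _, done = step(state, ACTIONS[best_action(q, state)])
        path.append(state)
        if done:
            break
    return path
```

After `q = train()`, calling `greedy_path(q)` walks the chicken around the cars to the gift, even though nothing in the code describes roads or chickens; only the rewards shaped that behavior.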

Optimizing the Rewards Function

In these cases, we don’t actually know the function the system learns, and because it is built with deep learning, it can use non-linear functions to detect non-obvious relationships. In some games, for example, the system learns something called “Long Term Value”: it makes decisions very early on with the objective of a bigger gain at the end.

In one gaming example, Lange explains, the algorithm has played millions of games and learned that to get away with some really clever moves, it must first make a couple of really bad ones. Playing against humans over time, the computer has learned that after these bad moves, the human player stops paying attention to what the computer is doing and starts playing their own game, determined to “beat this thing” now that it has made a mistake. Yet the computer actually has a plan: it ramps up with some good moves, and the human loses. This strategy has shown up many times.
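“Long Term Value” is Lange’s phrase; the standard reinforcement learning formalization of the idea is the discounted return, the sum of each reward weighted by `gamma ** t`. The sketch below uses invented reward sequences to show why an agent that values the future can prefer early “bad moves,” and how the discount factor `gamma` controls that. It illustrates the general principle only, not the specific game or model from the talk.

```python
def discounted_return(rewards, gamma=0.99):
    """Score a sequence of rewards as sum(gamma**t * r_t).

    gamma near 1 values future rewards almost as much as immediate ones;
    a small gamma makes the agent short-sighted.
    """
    return sum(gamma ** t * r for t, r in enumerate(rewards))


# Hypothetical reward sequences for two strategies over eight moves.
grab_now = [1, 1, 1, 0, 0, 0, 0, 0]       # take small gains immediately
sacrifice = [-1, -1, 0, 0, 0, 0, 0, 10]   # two "bad moves" that set up a big win

for gamma in (0.99, 0.5):
    print(gamma, discounted_return(grab_now, gamma), discounted_return(sacrifice, gamma))
```

With `gamma = 0.99` the sacrifice sequence scores higher (about 7.33 versus 2.97), while with `gamma = 0.5` the short-sighted agent prefers grabbing rewards immediately (1.75 versus about -1.42).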

The point here is the rewards function. Many game developers say they’ve been there and done that, but it doesn’t work: the AI becomes superhuman, and that’s not fun. According to Lange, that happens when the rewards function is “kill your human opponent as quickly as possible,” and he agrees that, indeed, that is not fun.

But imagine what would happen if you built a game where the rewards function is to maximize minutes of playtime. If the computer-controlled character, while still exploring, comes right out and shoots you, that’s not much playtime. Still in exploration mode, the computer tries other strategies, such as hiding so that the human has to search for it. But humans get tired of that after a while and quit. So a third strategy the computer tries is to let the human almost catch it, then disappear, then show up again, engaging the human to earn more playtime. What’s important here, again, is that no psychologist or game designer is sitting behind this; it is driven purely by the rewards function.
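To show that only the reward changes while the learning machinery stays the same, here is a sketch that scores the three strategies above under two different rewards functions. The playtime numbers and outcomes are invented for illustration, and in a real system an RL agent would discover such strategies through trial and error rather than pick them from a fixed list.

```python
from dataclasses import dataclass


@dataclass
class Strategy:
    name: str
    minutes_played: float  # how long the human keeps playing (hypothetical)
    human_defeated: bool   # whether the AI beats the human


def reward_fast_kill(s: Strategy) -> float:
    """Reward beating the human; beating them sooner scores higher."""
    return 1.0 / s.minutes_played if s.human_defeated else 0.0


def reward_playtime(s: Strategy) -> float:
    """Reward only the minutes the human keeps playing."""
    return s.minutes_played


# Invented outcomes for the three strategies described in the talk.
STRATEGIES = [
    Strategy("shoot on sight", minutes_played=1.0, human_defeated=True),
    Strategy("hide out", minutes_played=8.0, human_defeated=False),
    Strategy("almost caught, vanish, reappear", minutes_played=25.0, human_defeated=False),
]


def best_strategy(reward_fn):
    """Return the name of the strategy the given rewards function prefers."""
    return max(STRATEGIES, key=reward_fn).name
```

`best_strategy(reward_fast_kill)` picks “shoot on sight,” while `best_strategy(reward_playtime)` picks the cat-and-mouse strategy: the same optimizer, pointed at a different goal.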

You can see where this is valuable. What if we optimized the rewards function for in-app purchases? Games are often designed so that in-app purchases make gameplay easier for 60 minutes, after which it gets harder again, training the player’s brain to keep making purchases. If the rewards function combined playtime and in-app purchases, the computer could learn on its own how to maximize both, playing against billions of people and discovering the most effective ways to drive these actions.
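The source doesn’t say how the two goals would be combined; a common choice is a weighted sum, which this sketch assumes. The weights and the two design outcomes are hypothetical. The point is that the weighting, not the learning algorithm, decides which behavior the agent gets pushed toward.

```python
def combined_reward(minutes_played: float, purchases_usd: float,
                    w_time: float = 1.0, w_purchase: float = 5.0) -> float:
    """Weighted sum of playtime and spending; the weights are design choices."""
    return w_time * minutes_played + w_purchase * purchases_usd


# Invented outcomes for two hypothetical difficulty designs.
easy_throughout = {"minutes_played": 60.0, "purchases_usd": 0.0}
difficulty_spike = {"minutes_played": 40.0, "purchases_usd": 2.99}

for w in (5.0, 10.0):
    print(w,
          combined_reward(**easy_throughout, w_purchase=w),
          combined_reward(**difficulty_spike, w_purchase=w))
```

With `w_purchase = 5` the easy design scores higher (60 versus 54.95); at 10 the difficulty spike wins (about 69.9 versus 60).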

With the Affectiva SDK in a Unity game, a developer could observe a person’s emotional reactions while they play. Rather than using that signal to hand-engineer rules (“if the user smiles, do this”), the developer can have the game learn how to interact with the user instead of forcing a fixed interaction model. The game could figure out what makes users laugh, smile, and pay attention, and fold all of that into the rewards function that drives gameplay.
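One way the emotional signal could enter the loop is as an extra term in the rewards function, so the agent is rewarded for sessions where players smile and pay attention, with no hand-written rules. The sketch assumes per-frame “joy” and “attention” scores on a 0 to 100 scale; those field names, the scale, and the weights are hypothetical, and the function does not call the Affectiva SDK’s actual API.

```python
from statistics import mean


def emotion_reward(frames, minutes_played, w_time=1.0, w_emotion=10.0):
    """Blend playtime with average joy and attention over a session.

    frames: per-frame scores in [0, 100], e.g. {"joy": 80, "attention": 60}.
    The field names and scale are hypothetical stand-ins for whatever
    emotion metrics an SDK reports; this does not call any real SDK.
    """
    joy = mean(f["joy"] for f in frames) / 100.0
    attention = mean(f["attention"] for f in frames) / 100.0
    emotion_score = 0.5 * joy + 0.5 * attention  # equal weights, an arbitrary choice
    return w_time * minutes_played + w_emotion * emotion_score
```

Two sessions with the same playtime now score differently: one where the player was smiling and focused earns more reward than one where they were bored, so an RL agent trained on this signal is pushed toward whatever gameplay produces those reactions.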

Next Steps: Advice for Developers

All of the above boils down to three steps for developers using ML:
1) Train one or more characters by having the computer play against itself (computer vs. computer).
2) Let the character see examples of human behavior and retrain it with imitation learning: show the computer how to interact, and it will learn from that.
3) Set the character to play against humans and keep learning from those interactions.
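Step 2 is probably the least familiar of the three. The simplest form of imitation learning is behavior cloning: record (state, action) pairs from human play and fit a policy that reproduces them. The tabular sketch below picks the most common human action for each state; the state and action names are hypothetical, and a real game would more likely train a neural network on continuous observations. In step 3, a policy like this would then keep improving through reinforcement learning against human players.

```python
from collections import Counter, defaultdict


def behavior_clone(demonstrations):
    """Build a lookup-table policy from human (state, action) pairs.

    For each state, the policy picks the action humans chose most often.
    """
    counts = defaultdict(Counter)
    for state, action in demonstrations:
        counts[state][action] += 1
    return {state: c.most_common(1)[0][0] for state, c in counts.items()}


# Hypothetical recorded human play.
demos = [
    ("enemy_near", "dodge"),
    ("enemy_near", "dodge"),
    ("enemy_near", "attack"),
    ("item_visible", "pick_up"),
]
policy = behavior_clone(demos)
print(policy)
```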

For more from Danny Lange’s Emotion AI Summit talk, “The Emotion AI Loop in Next-Generation Digital Experiences”, you can download the session recording here.

About Danny Lange

Before Unity, Lange was head of machine learning at Uber, where he built and rolled out company-wide machine learning systems; he spent a lot of time working on emotion-enabled cars and on teaching cars how to drive. Before that, he was general manager for machine learning at Amazon, where he got more than 100 teams across the company using a unified machine learning platform. An accomplished author and speaker, Lange is also named on numerous patents.