Imagine you’re in a noisy, public place. A girl from afar is trying to communicate with you. You can’t hear her because of the surrounding noise, but you can see her clearly. Just from her facial expressions you can gain a pretty good understanding of her emotional state. Now picture a different scenario: this girl is standing close behind you. You can’t see her face anymore, but you can hear what she is saying. Even without seeing her face, you can still draw a fairly accurate conclusion as to what her emotional state is based on her speech.

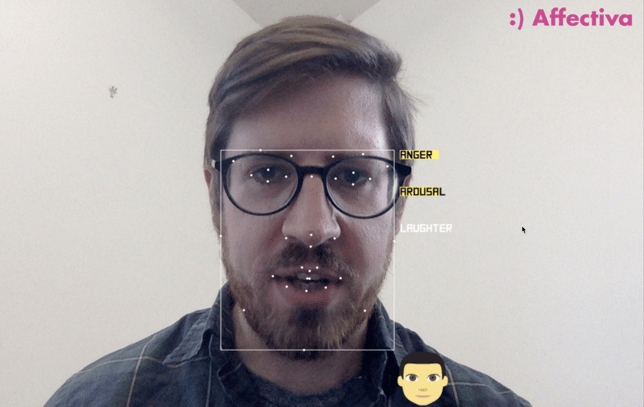

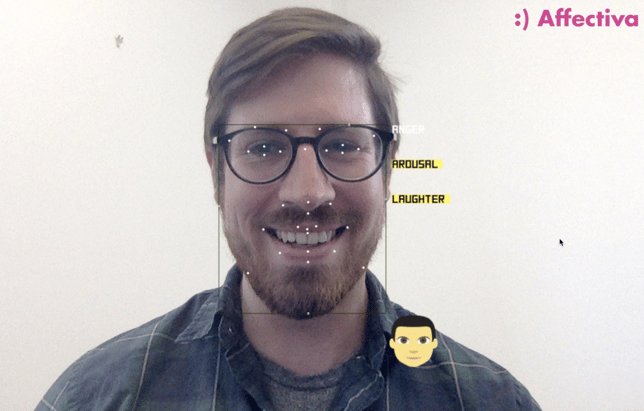

Both of these methods of interpreting emotions work well, and Affectiva has already established itself as a reliable emotion interpreter of facial expressions. However, there’s no question that the best scenario for formulating the most trustworthy emotion analysis would be one in which you can hear and see the speaker. So, it only makes sense that Affectiva is now diving into this new form of emotion recognition via speech.

We spoke with our lead speech scientist, Taniya Mishra, about her work in emotion recognition from speech. Affectiva recently announced this speech capability at our Emotion AI Summit.

Why speech analysis is important for Emotion AI?

To frame it very generally, the more technology is used to power social robots or AI powered assistants, the more natural we want the interaction to be and, therefore, the more human-like these systems have to behave. Affectiva has already begun addressing this challenge by analyzing emotions via facial expressions. However, the face only tells part of the story: we express emotions through multiple channels - not solely through our faces.

According to the 7-38-55 Rule of Personal Communication, words influence only 7% of our perception of affective state. Tone of voice and body language contribute to 38% and 55% of personal communication, respectively. Given that, we know that when we’re only looking at the face, we are only covering one channel to estimate emotional state. We want a second channel of information. So, as a result, Affectiva is now looking at speech. We want to estimate emotional state using multiple modalities. This is why we’ve moved from using just face to using both face and voice.

Goals for Speech in Emotion AI

To start out, we want to measure the six basic emotions: anger, disgust, fear, happiness, sadness, and surprise. You can imagine if someone is getting angry or frustrated while they’re talking to a social robot or AI powered assistant like Siri. It should recognize that you’re mad and say something like, “Am I not understanding you?”

We want to measure interesting speech events, such as laughter, sighs, grunts, or filled pauses like “um” or “uh.” Each of these events signal the emotions and attitudes that the speaker wants to convey. For example, filled pauses often signal that I’m still thinking and am not ready to give up my turn in the conversation (see “floor-holding”).

We want to estimate speaker characteristics like their gender, age, and accent. Let’s say a child is using Amazon’s Alexa to order five dolls; the system should be able to recognize that this is a child and say something like, “Let’s check with your parents.” So, knowing the approximate age, and other demographic information, can be very useful for automated personal assistants to appropriately process and respond to user requests.

The Process of Analyzing Speech for Emotions

We are framing this as a machine learning problem where we want to build models that can recognize people’s emotions from their voice. We are taking a deep learning based modeling approach. The reason we are using deep learning is because in the last several years use of deep architectures has shown unprecedented success in several areas of speech processing including speech recognition, synthesis and translation. We were particularly encouraged by recent academic publications that reported very promising results when deep learning was applied to this problem. A particularly attractive feature of deep learning architectures is that they can consume raw data as input (such as images or audio files) and learn discriminating features from the data as part of the end-to-end optimization process, without requiring human experts to perform feature engineering on the data before the training phase. Thus, we train our emotion estimation models in an end-to-end manner using deep learning models to automatically extract features of small and large granularity from the input speech.

The Challenges in Estimating Emotions from Speech

Deep learning is a data hungry methodology. It has been so successful for speech recognition because there are large quantities of data available. With emotion estimation, one of the biggest challenges revolves around the availability of data. Training a deep network requires large quantities of data — often in the order of tens of thousands to millions of samples — whereas emotion-labeled datasets are inherently small due to issues surrounding data collection and data annotation.

Collecting natural emotional data is challenging because this type of data is very private. Either people do not want to share it or even if they do, put in front of a camera or a microphone, people instinctively become very guarded in their emotional display or very awkward, making collection of naturally occurring emotional speech a challenging issue. Secondly, even if you have data, labeling is both time consuming and somewhat noisy. We can only expect models to do as well as the human labels can do, and humans only agree with each other as to what the perceived emotion is 70-80% of the time. This discrepancy is because perception of emotions is affected by the alignment of language and culture between the displayer and the observer of emotions.

In our modeling approach, the lexical content of the words used in the spoken utterance is not used for model training, rather the models are trained on the paralinguistics, tone, loudness, speed, and voice quality that distinguishes the display of different emotions. However, when you give people the task of labeling emotions, language matters. What if I say something very positive but in a very low, negative intonation? What do you do? It’s very hard for people to separate out the meaning of your words from the tone of your voice. Thus, the lexical content can bias the human labelers’ judgement regarding the emotion displayed. Culture of the displayer and the perceiver can pose similar challenges in acquiring human annotations. Both of these challenges: acquiring data in which emotions are naturally displayed, as well as getting human annotations of that data become exacerbated when we want to develop large datasets for training deep learning networks.

Our approach for skirting these challenges while acquiring naturally occurring emotions in speech is this: We collect audio and video datasets of people conversing naturally, and mine them for emotion bearing segments. These datasets could have been created with a particular intent (such as datasets that were developed for speech recognition) or just for fun and communal enjoyment, such as plethora of interesting videos that people share publicly. As long as it is data in which people are communicating naturally, we are interested in it because it is bound to contain emotion-bearing segments. We first automatically label such audio data with noisy and weak labels; then identify the audio segments that are likely to be emotional based on the weak labels; and finally verify the labels using expert human labelers. The weak labeling approaches that we consider include use of the meta-data surrounding the audio/video as well as automatic unimodal or cross-modal bootstrapping of labels evinced either manually or automatically. We use human labelers, who are linguistically and culturally similar to the the subjects displaying the emotions as well as those that are dissimilar. Currently for training, we use segments on which there is high agreement between the labelers, however we are also exploring methods of modeling the variance in perceptions of the latter and the former in a way that models can learn.

The Bottom Line: Making Emotion AI Even More Robust with Speech

In order to power natural human-machine communications, systems need to make sense of emotive display in humans and respond appropriately. Humans use multiple channels to display emotions, and our emotion display is not uniform - sometimes we rely more on the face, other times we display more through our voice, or even our gestures and body movements. Anger is usually displayed more through your voice than through your face. It might be difficult for a system to recognize anger from just the face, but pair the facial display with voice and now the system can detect anger much more reliably. The opposite is true for joy: people usually use their face to display joy, but they don’t do that much with their voice --- unless it is an extreme joy and mirth that brings about laughter and a vocally aroused voice. So in this case, voice alone will not give you a good enough estimate of someone being simply content and happy. These are just two examples; you can easily think of other examples when having both modalities present helps both humans --- and thus, machines --- better understand emotive display. But there are also situations when one or the other modality is either noisy or inaccessible.

These considerations taken together is a perfect reason for why we need to estimate emotions multimodally. At Affectiva we are serious about estimating emotions and we want to build systems that are robust and able to use whichever channel of information is available, where it is face or voice, or both.