Guest blog by: Erin Reynolds, Founder, President, and Creative Director of Flying Mollusk

I’ve been a game designer for over ten years – which is practically centuries in the game industry. I’ve been a game player for much, much longer than that. I love playing video games almost as much as I love making them. It’s an exciting time to be both a developer and a player, and of all the new technologies that are blossoming, none excite me more than those that allow the game to connect with the player on a physiological and emotional level.

CONNECTING ON A DEEPER LEVEL

Games make us laugh. They make us feel exhilarated. Sometimes they make us feel a sense of awe by experiencing their worlds or even melancholy by partaking in their stories. Games have an incredible power to take us to places, compel us to take action, and inspire us to perceive things in ways that we never would (or could) have done otherwise. Games have the potential to provide powerful, moving, dynamic experiences that take us on the wildest of journeys.

The thing is, traditionally, we could only communicate with these vast worlds, painstakingly crafted characters, and deep narratives through cold button clicks, finger swipes, or even, in some cases, full-body gestures. We could participate only through a strange interpretive dance that, usually, involved just our thumbs.

However, we know that real communication is a two-way street that involves not only conscious actions, but also emotional cues and the subconscious signals they contain. Emotion recognition technology like Affectiva’s Emotion AI software now enables games to identify those subconscious emotional signals – giving developers the ability to create experiences that communicate with the player on a more natural and profound level. For example, players could interact with a character not only by using pre-written dialogue options, but also via a subtle arched eyebrow of disbelief or a grin of approval. Digital environments could blossom in response to the player’s happiness or become more muted when the player expresses sadness. The possibilities of meaningfully integrating emotion recognition into a video game experience are truly limitless and – I believe – critical to the overall evolution of the medium.

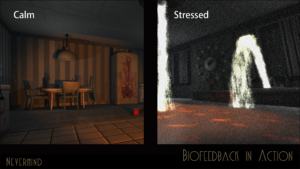

Biofeedback response in the Nevermind game.

PERSONALIZED EXPERIENCE

While I could write for days about all of the possibilities that emotion recognition can offer gaming as an art form and creative cultural medium, there are, of course, some more practical applications as well. First and foremost, if a game can tell how a player is feeling, it can create a more personal and responsive experience in real time. Perhaps the easiest example to imagine is real-time difficulty adjustment. If the game recognizes that the player is becoming frustrated or aggravated, it can adjust itself on the fly to become easier for an overwhelmed player. Alternatively, if the game determines that the player is bored, it can adjust to become more challenging. Emotion recognition capabilities empower games to “listen” to their players and react immediately to those subconscious signals.
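To make the difficulty example concrete, here is a minimal sketch of how such an adjustment loop might look. It assumes the game already receives "frustration" and "boredom" scores between 0 and 1 from some emotion recognition layer. The class name, the score names, the threshold, and the step size are all hypothetical choices for illustration, not part of any particular SDK.

```python
from dataclasses import dataclass


@dataclass
class DifficultyController:
    """Nudges a difficulty value in [0.0, 1.0] based on smoothed emotion scores.

    All names and default values here are illustrative assumptions.
    """

    difficulty: float = 0.5   # 0.0 = easiest, 1.0 = hardest
    threshold: float = 0.6    # smoothed score above which the game reacts
    step: float = 0.1         # how far difficulty moves per update
    smoothing: float = 0.5    # weight given to the newest reading
    frustration: float = 0.0  # smoothed frustration estimate
    boredom: float = 0.0      # smoothed boredom estimate

    def update(self, frustration: float, boredom: float) -> float:
        # Exponential moving average, so one stray expression
        # does not swing the game.
        a = self.smoothing
        self.frustration = a * frustration + (1 - a) * self.frustration
        self.boredom = a * boredom + (1 - a) * self.boredom

        if self.frustration > self.threshold and self.frustration >= self.boredom:
            self.difficulty -= self.step  # overwhelmed player: ease off
        elif self.boredom > self.threshold:
            self.difficulty += self.step  # bored player: raise the challenge

        # Keep the value inside the valid range.
        self.difficulty = min(1.0, max(0.0, self.difficulty))
        return self.difficulty


controller = DifficultyController()
controller.update(frustration=1.0, boredom=0.0)  # first reading: smoothed, no change yet
controller.update(frustration=1.0, boredom=0.0)  # sustained frustration: difficulty drops
```

The smoothing step is a design choice: a game that reacted to every single frame of a furrowed brow would feel erratic, so this sketch only responds once a feeling persists across readings.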

ALWAYS IMPROVING

Up until very recently, video games were – by necessity – one-size-fits-all in both design and execution. A game would be designed and developed, sent to manufacturing to be printed onto discs or cartridges, and then released into the wild. Once it was out there, all you could do as a developer was hope that most players who picked it up loved the game as-is, because there wasn’t a whole lot you could do about it. Sure, you could offer the player a few manual customizations like difficulty options, but those could help only so much if you discovered that your game was poorly balanced or, goodness forbid, not fun.

Thankfully, things are much more flexible these days. Many games – especially those available via phones or web browsers – report real-time data back to the developers and, from there, developers can tweak and tune the experience to be better and release updates at any point in time with those improvements. However, even then, the data is limited by what the computer can pick up from the player via “standard” input – we’re talking about things such as keyboard presses, mouse clicks, the time it takes to go from screen A to screen B, etc. Developers can make good assumptions about what it means when, say, a player takes 5 seconds longer to press the blue button than expected but, at the end of the day, they’re still just educated guesses.

Now, if a game can report back not just the discrete inputs of a player but, additionally, his or her emotions – that’s incredibly valuable data that gets to the heart of what designers and developers need to know! What’s going on in the player’s head – what is he or she feeling? We can finally know if a player is having fun, if the experience (or even just a part of the experience) makes him or her feel happy, sad, frustrated, elated, and everything in between.

It means that we, as developers, are at last able to improve our games based on the data that counts most, so that they are as fun and impactful as we imagine them to be and our players have the best gameplay experience possible. That’s some pretty powerful stuff.
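As a rough illustration of the kind of analysis this data could support, the sketch below averages emotion readings per section of a game and picks out the section where players were most frustrated. The event format (section, emotion, score) and the sample values are invented for the example. A real telemetry pipeline would define its own schema.

```python
from collections import defaultdict
from statistics import mean


def average_emotion_by_section(events):
    """Group emotion readings by game section and average each emotion.

    `events` is an iterable of (section, emotion, score) tuples. This
    layout is a hypothetical telemetry format, not one from a real SDK.
    """
    scores = defaultdict(lambda: defaultdict(list))
    for section, emotion, score in events:
        scores[section][emotion].append(score)
    return {
        section: {emotion: mean(values) for emotion, values in by_emotion.items()}
        for section, by_emotion in scores.items()
    }


def most_frustrating_section(events):
    """Return the section with the highest average frustration, or None."""
    averages = average_emotion_by_section(events)
    frustrating = {
        section: emotions["frustration"]
        for section, emotions in averages.items()
        if "frustration" in emotions
    }
    return max(frustrating, key=frustrating.get) if frustrating else None


# Made-up sample readings, purely for illustration.
events = [
    ("level_1", "joy", 0.8),
    ("level_1", "frustration", 0.1),
    ("level_2", "frustration", 0.7),
    ("level_2", "frustration", 0.9),
    ("level_2", "joy", 0.2),
]
print(most_frustrating_section(events))  # prints "level_2"
```

A report like this points a designer at where to look. It does not say why players were frustrated there, so it complements playtesting rather than replacing it.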

SO, WHY DO WE NEED EMOTIONALLY SENSITIVE GAMES?

Emotionally sensitive games are capable of becoming an elevated art form that delivers the most enriching and engaging experiences possible. They can leverage the most valuable data, instantly respond to each player as a unique individual, and open up the conversation between user and media in a way that is nuanced, intimate, and unlike anything most of us have ever experienced in art and entertainment.

I think that’s pretty amazing. Welcome to the future.

Follow Erin on Twitter: @reynoldsphobia