In the first 5 years of Affectiva’s existence, we focused primarily on the market research (MR) space, that is, providing MR companies with emotion analytics that show how subjects respond emotionally to brand advertising. While this is still core to our business, we found that other organizations were also interested in the capabilities of Emotion AI, so we built an SDK to help meet their needs.

Among these inbound requests, we noticed an increasing number of companies from the automotive industry. They were looking for ways that emotion recognition could help improve car safety, use emotional data to better understand the user experience of the driver and passengers, and deepen car OEMs’ and suppliers’ understanding of the consumer.

This brings us to the next generation of cars. While these are interesting concepts, they are not reserved for some futuristic vehicle design: car manufacturers are already adding these capabilities as features to existing cars. The Emotion AI applications for cars are many: from drowsiness detection for driver safety, to identifying whether the driver is attentive or distracted, to building a highly personalized and intimate in-cab experience. That personalized in-cab experience is the next battleground for consumers’ hearts and for loyalty to the car brand. Additionally, the ability to accurately detect emotions is viewed as a significant enhancement to the human-machine interface in vehicles. Imagine the use cases for an emotion-aware infotainment system.

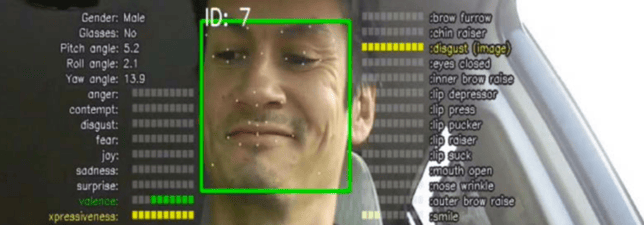

Being able to recognize those actions in drivers and passengers can help smart cars better understand what is going on inside the car. Based on those observations, control can pass between the smart car and the driver, or triggers can be put in place: for example, an alarm that alerts the driver if he becomes drowsy, before he drifts out of his lane. These features can not only enhance road safety but also improve the driving experience in the next generation of family cars and public transportation.
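To make the idea of a drowsiness trigger concrete, here is a minimal sketch, assuming some upstream facial-expression model already produces a per-frame drowsiness score between 0.0 (alert) and 1.0 (asleep). The class and function names, the window size, the threshold, and the cooldown are all hypothetical, illustrative values, not Affectiva’s implementation or tuned parameters. The trigger averages scores over a sliding window of recent frames and fires when that average crosses a threshold.

```python
from collections import deque
from typing import Iterable, List


class DrowsinessTrigger:
    """Hypothetical alarm trigger over per-frame drowsiness scores.

    Assumption: scores in [0.0, 1.0] come from an upstream model that is
    not shown here. Window, threshold and cooldown are illustrative only.
    """

    def __init__(self, window: int = 30, threshold: float = 0.7, cooldown: int = 90):
        self.scores = deque(maxlen=window)  # most recent per-frame scores
        self.threshold = threshold
        self.cooldown = cooldown            # frames to stay quiet after an alert
        self._quiet_frames = 0

    def update(self, score: float) -> bool:
        """Add one frame's score; return True if an alert should fire now."""
        self.scores.append(score)
        if self._quiet_frames > 0:
            # Still in the cooldown period after the last alert.
            self._quiet_frames -= 1
            return False
        # Wait until the window is full, then average over it so that
        # brief events (e.g. a blink) are smoothed out.
        if len(self.scores) < self.scores.maxlen:
            return False
        if sum(self.scores) / len(self.scores) >= self.threshold:
            self._quiet_frames = self.cooldown
            return True
        return False


def alert_frames(scores: Iterable[float], **kwargs) -> List[int]:
    """Return the indices of the frames at which an alert fires."""
    trigger = DrowsinessTrigger(**kwargs)
    return [i for i, s in enumerate(scores) if trigger.update(s)]
```

For example, with 50 alert frames (score 0.1) followed by 50 drowsy frames (score 0.9), `alert_frames(scores, window=10, threshold=0.7, cooldown=20)` fires at frames 57, 78 and 99. The cooldown keeps the alarm from sounding on every frame once the driver is drowsy.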

To help break down the work we are conducting with leading automotive companies on these emotion recognition capabilities, here is what we have done so far and our vision for this space.

But We’ve Already Analyzed 5 Million Faces... Why Reinvent the Wheel?

As we mentioned, Affectiva has been very focused on the market research space. With over 5 million faces analyzed from hundreds of facial videos captured in market testing, why start over with automotive? There are a few reasons:

1) The market research industry has a very specific setting for data. Most often, participants view ad stimuli on a laptop or portable device at a fairly consistent distance from their face, the lighting is controlled, and the face is expected at a specific angle.

2) Video length also matters for automotive: drivers need to be observed for far longer than the 30 to 60 seconds of the average video ad that most market research subjects are asked to view. This meant we needed longer videos to map appropriately to the automotive use case.

3) Lastly, the environment and situation of market research subjects and of people actively driving a car differ substantially. Compared with sitting at a table or on your couch watching an ad on your laptop, your body position, expressions, and actions change dramatically when you are operating a motor vehicle: looking for street signs, waiting at a traffic light, keeping an eye out for obstacles, talking on a (hands-free) phone.

While we can still leverage the large repository of emotion data at our disposal for testing purposes, we understood the importance of a truly robust model built for this specific type of data. All the driver data we are able to collect and annotate further helps train our model against the challenges we’ve identified. This process will help us continuously tune and improve our model to build a better, more reliable system for in-car applications.

Gathering the Data

We’ve collected data in-house for our major automotive partners. For early tests, we personally collected 1.16 million frames from 27 drivers. Our subjects came from a variety of ethnicities (Caucasian, East Asian, South Asian, African, and Latino) and ages, most of them within the 35 to 55 age range.

We rolled out the data collection in 2 phases. First, we asked team members to drive a car fitted with a camera we had mounted and to go through scenarios in which we could gather “posed” expressions, such as making expressions for the 6 emotions, looking over the shoulder, and checking a phone. Second, we had drivers drive spontaneously with no instruction, to capture “in the wild” data on how people normally emote while driving. For both phases (posed and spontaneous), we captured between 40 and 60 minutes of driving video in Japan and the US.

Challenges in analyzing emotion data for cars

We’ve touched on why driver data is more challenging than the data our algorithms usually analyze. Here are some other considerations we had to keep in mind (or figure out!) when gathering driver data:

- In-cab lighting changes on the driver’s face. Light streams through the windows and hits the face sporadically, varying with the time of day and as drivers navigate through streets. At certain times of day, bright sun can wash out a driver’s face. Conversely, lighting inside a car at night is (purposely) very poor so that drivers can see the road and the obstacles around them. We are exploring alternative ways to accurately capture facial muscle movements even when there is little or no ambient light.

- Pose changes. From looking into the rearview mirror to rubbernecking at an accident on the highway, there are instances where a head-on view isn’t possible. People also talk on the phone and eat while driving. We had to account for these different head poses when interpreting facial expressions for emotion.

- Position of the camera. We experimented with multiple camera locations to find the one that best captures driver expressions.

- Occlusions of the face. These include baseball cap visors, large sunglasses (though our technology can read through regular eyeglasses), and drivers covering their face with a hand or a cellphone.

Across all of these challenging scenarios, we knew we had to develop a system that is robust for every use case, and in many instances we were able to solve for them.

Labeling Emotion Driver Data

We have a pretty interesting and exhaustive data labeling process (stay tuned for another blog on that!), and for driver data it was similar to how we label any facial video. Our certified labeling team in Cairo goes through and annotates all the data we’ve gathered. Their process is, roughly, frame-based annotation: they label emotions (for example, is this person happy or not?), then annotate appearance attributes such as age, ethnicity, and gender. Multiple labelers annotate each frame to make the annotation as accurate as possible. Frames then go through a quality assurance (QA) process that either passes the labeled frames or addresses the frames the labelers were not confident about or disagreed on.
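The agreement check described above can be sketched roughly as follows. This is a minimal illustration, not our actual labeling pipeline: it assumes each labeler gives one label per frame plus a yes/no confidence flag, and it uses a hypothetical two-thirds agreement threshold. The names `Annotation`, `review_frame`, and `review_frames` are invented for this example. A frame passes with its majority label only if enough labelers agree and all of them were confident; otherwise it is flagged for QA.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Annotation:
    labeler: str
    label: str        # e.g. "happy" / "not_happy"
    confident: bool   # assumption: labelers mark each frame as confident or not


def review_frame(annotations: List[Annotation],
                 min_agreement: float = 2 / 3) -> Tuple[Optional[str], bool]:
    """Return (consensus_label, needs_qa) for one frame.

    The frame passes when the most common label reaches `min_agreement`
    (a hypothetical threshold) and every labeler was confident; otherwise
    it is flagged for QA and no consensus label is returned.
    """
    if not annotations:
        return None, True
    counts = Counter(a.label for a in annotations)
    label, votes = counts.most_common(1)[0]
    agreement = votes / len(annotations)
    all_confident = all(a.confident for a in annotations)
    if agreement >= min_agreement and all_confident:
        return label, False
    return None, True


def review_frames(frames: Dict[int, List[Annotation]]) -> Tuple[Dict[int, str], List[int]]:
    """Split frames into passed labels and a sorted list of frame ids needing QA."""
    passed: Dict[int, str] = {}
    flagged: List[int] = []
    for frame_id, annotations in frames.items():
        label, needs_qa = review_frame(annotations)
        if needs_qa:
            flagged.append(frame_id)
        else:
            passed[frame_id] = label
    return passed, sorted(flagged)
```

Returning no label for a flagged frame, rather than guessing from a split vote, keeps the disagreement visible to the QA reviewers who resolve it.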

At the subject level, we found some people to be very expressive: they went through a whole range of emotions as they made their way to their destination. Another category of people stayed mostly neutral throughout their journey.

Building Driver Data Analysis to Scale

This is where the beauty of our multi-platform SDK comes in. Its advantage is that emotion recognition can run on device, in real time, without the need for internet access. Our model can run locally in the car; it does not record subjects, and performs real-time facial expression analysis only. This will help us scale globally for use in all kinds of cars, as will the ability to get feedback in real time.

What’s Next: Affectiva Driving Emotions with Automotive Partners

This is only the beginning of what we are able to do with driver data. In the future, your car will be connected to your emotional profile, possibly combining it with other open data sources such as weather and traffic to build the best possible in-cab experience for the driver. Developing an AI system capable of monitoring the driver’s emotional state requires collecting data into our growing driver emotion database. If you are in the automotive industry and would like first access to this rapidly growing market for the emotional car, contact us to learn more.