Artificial emotional intelligence, or Emotion AI, is also known as emotion recognition or emotion detection technology. Humans rely on many non-verbal cues, such as facial expressions, gestures, body language, and tone of voice, to communicate their emotions.

At Affectiva, our vision is to develop Emotion AI that can detect emotion just as humans do. Our long-term goal is to design more intuitive tools that build a deeper understanding of human perception into AI.

Let’s dive into the exact emotion metrics we offer, how we calculate them and map them to emotions, and how we determine their accuracy.

Affectiva Emotion Metrics

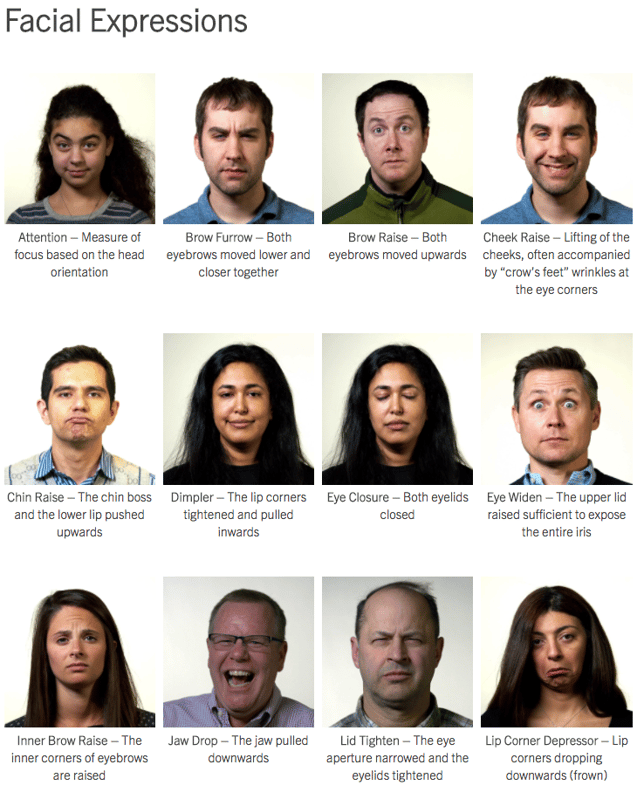

The face provides a rich canvas of emotion. Humans are innately programmed to express and communicate emotion through facial expressions. Our technology scientifically measures and reports the emotions and facial expressions using sophisticated computer vision and machine learning techniques.

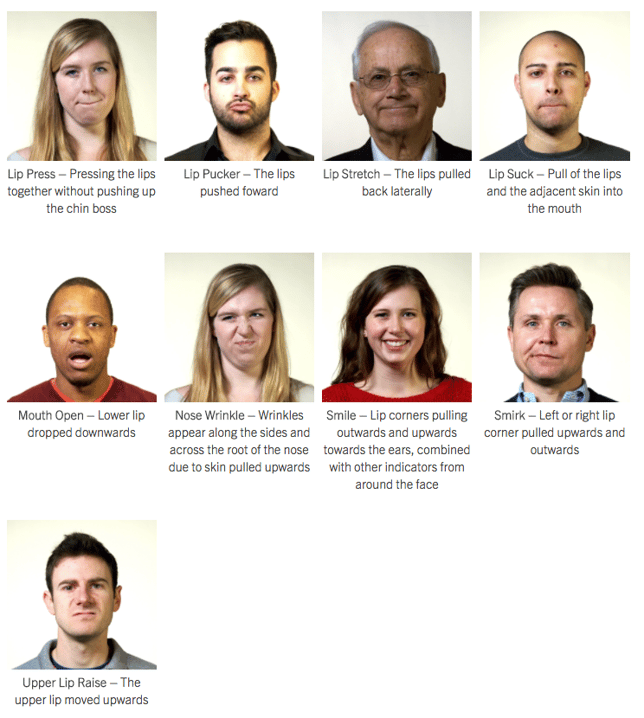

When you use the Affectiva SDK in your applications, you will receive facial expression output in the form of nine emotion metrics and 20 facial expression metrics.

Emotions

The SDK also measures valence and engagement as alternative metrics of the emotional experience. Let’s explain what we mean by engagement and valence.

How do we calculate engagement?

Engagement, also referred to as Expressiveness, is a measure of facial muscle activation that reflects the subject’s emotional engagement. Values range from 0 to 100. Engagement is calculated as a weighted sum of the following facial expressions:

- Inner and outer brow raise.

- Brow furrow.

- Cheek raise.

- Nose wrinkle.

- Lip corner depressor.

- Chin raise.

- Lip press.

- Mouth open.

- Lip suck.

- Smile.
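To make the weighted sum concrete, here is a minimal Python sketch. This post does not publish the actual weights, so the equal weights below are an assumption, as are the snake_case expression keys; inner and outer brow raise are treated as two separate inputs. Because the assumed weights sum to 1 and each expression score lies between 0 and 100, the result stays within the 0 to 100 engagement range.

```python
# Hypothetical keys and weights: the real engagement weights are not published
# here, so every expression gets an equal share. The weights sum to 1, which
# keeps a weighted sum of 0-100 inputs inside the 0-100 engagement range.
ENGAGEMENT_EXPRESSIONS = (
    "inner_brow_raise", "outer_brow_raise", "brow_furrow", "cheek_raise",
    "nose_wrinkle", "lip_corner_depressor", "chin_raise", "lip_press",
    "mouth_open", "lip_suck", "smile",
)
WEIGHTS = {name: 1 / len(ENGAGEMENT_EXPRESSIONS) for name in ENGAGEMENT_EXPRESSIONS}


def engagement(expressions: dict[str, float]) -> float:
    """Weighted sum of expression scores (each 0-100); missing expressions count as 0."""
    total = sum(w * expressions.get(name, 0.0) for name, w in WEIGHTS.items())
    # Clamp to guard against floating-point drift or out-of-range inputs.
    return max(0.0, min(100.0, total))


frame = {"smile": 90.0, "cheek_raise": 70.0, "mouth_open": 40.0}
print(round(engagement(frame), 1))  # prints 18.2
```

With equal weights, a single strong smile contributes only a fraction of the total; a real weighting would likely emphasize some expressions over others.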

How do we calculate valence?

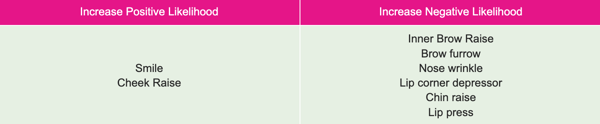

Valence is a measure of the positive or negative nature of the recorded person’s experience. Values range from -100 to 100. The valence likelihood is calculated from a set of observed facial expressions:

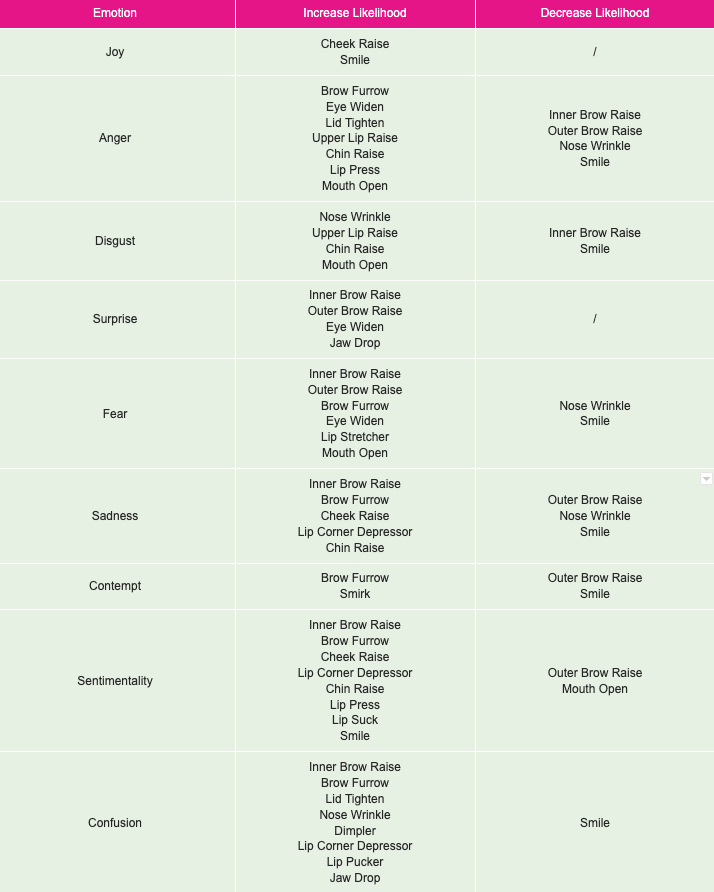
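The sketch below illustrates one way a signed valence score could be derived from expression scores. The actual expression set and weighting are given in Affectiva’s table and are not reproduced here: the positive and negative expression groups and the "strongest positive cue minus strongest negative cue" rule are assumptions, chosen only because they keep the result in the -100 to 100 range.

```python
# Hypothetical expression groups: which expressions push valence up or down
# is an assumption for illustration, not Affectiva's published mapping.
POSITIVE = ("smile", "cheek_raise")
NEGATIVE = ("brow_furrow", "nose_wrinkle", "lip_corner_depressor")


def valence(expressions: dict[str, float]) -> float:
    """Signed score in [-100, 100]: strongest positive cue minus strongest negative cue."""
    pos = max((expressions.get(n, 0.0) for n in POSITIVE), default=0.0)
    neg = max((expressions.get(n, 0.0) for n in NEGATIVE), default=0.0)
    # Both terms lie in [0, 100], so the difference lies in [-100, 100].
    return pos - neg


print(valence({"smile": 80.0, "brow_furrow": 10.0}))  # prints 70.0
print(valence({"brow_furrow": 60.0}))                 # prints -60.0
```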

How do we map facial expressions to emotions?

The emotion predictors use the observed facial expressions as input to calculate the likelihood of an emotion. Our facial-expression-to-emotion mapping builds on the EMFACS mappings developed by Friesen & Ekman. Additionally, our Sentimentality and Confusion metrics are unique to Affectiva and are derived from custom analysis of the video content that people are reacting to.

A facial expression can have either a positive or a negative effect on the likelihood of an emotion. The following table shows the relationship between the facial expressions and the emotion predictors.
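As an illustration of how expressions can push an emotion’s likelihood up or down, the following sketch combines expression scores with a logistic function. This is a swapped-in technique, not Affectiva’s published predictor, and the `JOY_WEIGHTS` and `JOY_BIAS` values are invented: a positive weight (smile) raises the joy likelihood and a negative weight (brow furrow) lowers it.

```python
import math

# Invented signed weights for a hypothetical "joy" predictor: positive weights
# raise the likelihood, negative weights lower it.
JOY_WEIGHTS = {"smile": 6.0, "cheek_raise": 2.0, "brow_furrow": -3.0, "lip_corner_depressor": -3.0}
JOY_BIAS = -4.0


def emotion_likelihood(expressions, weights, bias):
    """Logistic combination of 0-100 expression scores, rescaled to a 0-100 likelihood."""
    z = bias + sum(w * expressions.get(name, 0.0) / 100.0 for name, w in weights.items())
    return 100.0 / (1.0 + math.exp(-z))


smiling = {"smile": 90.0}
print(round(emotion_likelihood(smiling, JOY_WEIGHTS, JOY_BIAS), 1))  # prints 80.2
frowning = {"smile": 90.0, "brow_furrow": 80.0}
print(round(emotion_likelihood(frowning, JOY_WEIGHTS, JOY_BIAS), 1))  # prints 26.9
```

Under these assumed weights, adding a strong brow furrow to a broad smile lowers the joy likelihood from about 80 to about 27, which is the kind of negative effect the table describes.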

Using the Metrics

Emotion and expression metric scores indicate when users show a specific emotion or expression (e.g., a smile), along with the degree of confidence. The metrics can be thought of as detectors: as the emotion or facial expression occurs and intensifies, the score rises from 0 (no expression) to 100 (expression fully present).
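Because each score behaves like a detector, one simple way to consume a stream of per-frame scores is to mark where it crosses a threshold. This is a minimal sketch: the threshold of 50 is an assumption rather than an Affectiva recommendation, and the frame scores are made up.

```python
def detect_events(scores, threshold=50.0):
    """Return (start, end) frame indices, end exclusive, where score >= threshold."""
    events = []
    start = None
    for i, score in enumerate(scores):
        if score >= threshold and start is None:
            start = i  # expression has risen past the threshold
        elif score < threshold and start is not None:
            events.append((start, i))  # expression has faded below it
            start = None
    if start is not None:
        events.append((start, len(scores)))  # still active at the last frame
    return events


smile = [0, 5, 30, 65, 90, 85, 40, 10, 55, 70]
print(detect_events(smile))  # prints [(3, 6), (8, 10)]
```

A single fixed threshold can flicker when a score hovers near it; applications often add smoothing or separate on/off thresholds, which is a design choice left out here for brevity.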

We also expose a composite emotional metric called valence, which gives feedback on the overall experience. Valence values from 0 to 100 indicate a neutral to positive experience, while values from -100 to 0 indicate a negative to neutral experience.
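To summarize an overall experience from per-frame valence, one option is to average the values and label the result. The `neutral_band` cut-off below is a hypothetical parameter: the text only says that values above 0 lean positive and values below 0 lean negative, so how wide a "neutral" zone to allow is a design choice for your application.

```python
from statistics import mean


def describe_valence(frames, neutral_band=10.0):
    """Average per-frame valence (-100 to 100) and label the overall experience.

    neutral_band is a hypothetical cut-off: averages within +/- neutral_band
    of 0 are reported as neutral.
    """
    if not frames:
        raise ValueError("need at least one valence value")
    avg = mean(frames)
    if avg > neutral_band:
        label = "positive"
    elif avg < -neutral_band:
        label = "negative"
    else:
        label = "neutral"
    return avg, label


print(describe_valence([20.0, 45.0, 70.0]))  # prints (45.0, 'positive')
print(describe_valence([-5.0, 0.0, 8.0]))    # prints (1.0, 'neutral')
```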

Determining Accuracy

We continuously train and test our expression metrics to provide the most reliable and accurate classifiers.

Our expression metrics are trained and tested on difficult datasets. We sampled our test set, composed of hundreds of thousands of facial frames, from more than 6 million facial videos. These videos come from more than 90 countries and capture real-world, spontaneous facial expressions made under challenging conditions, such as varying lighting, different head movements, and variation in facial features due to ethnicity, age, gender, facial hair, and glasses.

How do we measure our accuracy?

Affectiva uses the area under the Receiver Operating Characteristic (ROC) curve to report detector accuracy, as this is the most general way to measure it: the area summarizes a detector’s performance across all possible decision thresholds. ROC scores range from 0 to 1, where 0.5 corresponds to chance; the closer the value is to 1, the more accurate the classifier. Many facial expressions, such as smile, brow furrow, mouth open, eye closure, and brow raise, have an ROC score of over 0.9.
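The area under the ROC curve can be computed directly from its rank interpretation: it equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as half. This sketch uses made-up labels and scores and is not Affectiva’s evaluation code.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via its pairwise (Mann-Whitney) interpretation.

    labels: 1 if the expression is truly present in the frame, 0 otherwise.
    scores: detector outputs for the same frames (any monotonic scale).
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0  # positive correctly ranked above negative
            elif p == n:
                wins += 0.5  # ties count as half
    return wins / (len(pos) * len(neg))


labels = [1, 1, 1, 0, 0, 0]
scores = [95, 80, 40, 60, 20, 10]
print(round(roc_auc(labels, scores), 3))  # prints 0.889
```

The pairwise loop is O(n·m), which is fine for illustration; for test sets with hundreds of thousands of frames, a sort-based implementation such as scikit-learn’s `sklearn.metrics.roc_auc_score` is more practical.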

Some more nuanced facial expressions, which are much harder even for humans to identify reliably, include lip corner depressor, eye widen, and inner brow raise. These have ROC scores of over 0.8.

Face Tracking and Head Angle Estimation

The SDKs include our latest face tracker, which calculates the following metrics:

- Facial Landmarks Estimation: The tracking of the Cartesian coordinates for four facial landmarks.

- Head Orientation Estimation: Estimation of the head’s orientation in 3D space as Euler angles (pitch, yaw, roll).

- Interocular Distance: The distance between the two outer eye corners.
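Interocular distance is a Euclidean distance between two landmark coordinates. The sketch below computes it and, as an assumed use not described in this post, divides another landmark distance by it so that the measurement is roughly independent of face size in the image. The `Point` type, function names, and pixel coordinates are hypothetical and do not reflect the SDK’s API.

```python
import math
from typing import NamedTuple


class Point(NamedTuple):
    """Hypothetical 2D landmark in image pixel coordinates."""
    x: float
    y: float


def interocular_distance(left_outer_eye: Point, right_outer_eye: Point) -> float:
    """Euclidean distance (in pixels) between the two outer eye corners."""
    return math.hypot(right_outer_eye.x - left_outer_eye.x, right_outer_eye.y - left_outer_eye.y)


def normalized_distance(a: Point, b: Point, iod: float) -> float:
    """Distance between two landmarks expressed in units of interocular distance.

    Dividing by IOD roughly cancels out how large the face appears in the frame,
    though head yaw also shrinks the measured IOD.
    """
    return math.hypot(b.x - a.x, b.y - a.y) / iod


iod = interocular_distance(Point(100.0, 120.0), Point(160.0, 120.0))
print(iod)  # prints 60.0
print(round(normalized_distance(Point(120.0, 200.0), Point(140.0, 200.0), iod), 3))  # prints 0.333
```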

Ready to Get Started?

We know this 101 post is a lot to digest, and now you are an Emotion AI expert! Detecting emotions with technology is a highly complex challenge, but I’m sure you can imagine its many applications when it is integrated correctly. We also wanted to convey the level of sophistication that goes into accurately mapping facial expressions to emotions.

Also, in the interest of transparency, we wanted to be sure you understood not only what features and functionality we offer, but also how we arrived at them. You can always visit our science resources section to learn more about our technology, or check out the emotion recognition patents we have been awarded.