With so much information at their fingertips, people expect their cars to provide a similarly connected experience. They want an in-cabin environment that’s adaptive and tuned to their needs in the moment. To deliver that, automakers and mobility service providers need a deep understanding of what’s happening with the people in the vehicle.

We invited Zach Dusseau, Consulting Associate at global market research company IHS Markit, to speak on the state of the in-cabin sensing market at the Emotion AI Summit. Zach’s talk came just ahead of the Improving Road Safety panel and was intended to help frame that discussion with other thought leaders in the automotive space.

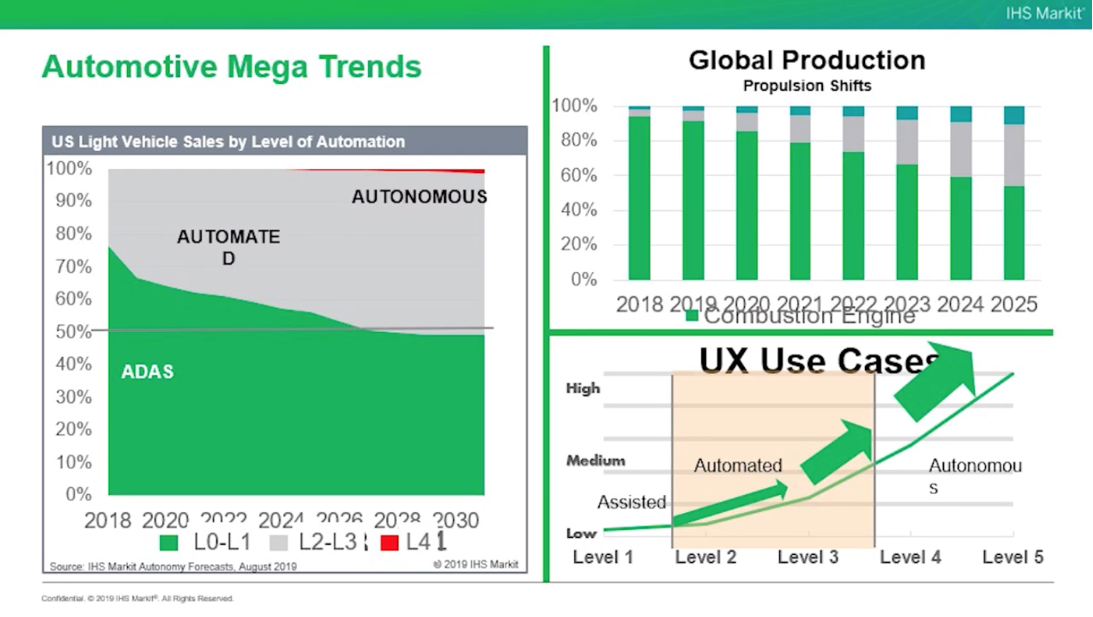

Drawing on his role on the IHS Markit auto advisory team, Dusseau outlined IHS Markit’s top-line automotive megatrends amid the volatile shift toward autonomy, electrification, and mobility. His talk shared key insights into future in-cabin monitoring use cases, assessed critical technologies, and addressed deployment challenges. Here are a few of his key points:

The Trends: Where the Automotive Industry is Heading

Autonomy and electrification are key influences on UX use cases. IHS Markit’s chart of US light vehicle sales by level of automation shows that cars at levels 0-1 (vehicles with ADAS features, shown in green) held about 75% of market share in 2018. That share is predicted to drop below 50% by 2030. Cars at levels 2-3 (shown in grey) grow to about 50% market share by 2030. Level 4 cars, the first stage of true autonomous driving, begin to pick up a thin sliver of volume (shown in red at the top right of the chart), reaching about 1% to 2% of market share in 2030.

Common Themes for Automotive UX Developers

- Deliver and delight without sensory overload. This concept is about managing and reducing the user's cognitive workload while increasing the valuable information available to them.

- People are comfortable with what they know, and consistency is key. When building features, OEMs must determine which technology those features need, but they also need to start from the technology and see which features it enables. One camera per feature is not sustainable, because cost is a key factor: every piece of technology put into the vehicle should ideally be multipurpose. With over-the-air (OTA) updates and new products and services being pushed out, OEMs are increasingly adding new products to existing vehicles, as long as the installed hardware can support them.

- Personalization with user profiles. Ideally, the customer wants a single user interface to control their whole life. This means bringing favorite apps, settings, entertainment, and seating configurations into the vehicle.

- Context. Understanding context is a major focus for OEMs and Tier-1s. Are four friends getting into the vehicle? A family of four? Four business associates? The vehicle needs to recognize the context and adapt its internal environment to each scenario.

- Connectivity. This means a near-seamless transition between tasks, regardless of location. For example, as you enter the vehicle, your car resumes playing Netflix right where you left off, or lets you continue writing that work email.

- In-cabin monitoring. Driver monitoring for purposes of enhancing road safety will continue to be a priority over entertainment.

What Will a Ride Look Like?

Dusseau broke the future ride into pre-ride, in-ride, and end-ride scenarios. The process starts with the pre-ride: before you ever approach the vehicle, it has been fueled and maintained. It arrives at the user's location automatically, opens the door, recognizes the user, and adjusts the interior settings based on the user profile and the contextual situation.

The in-ride experience may start with 20 minutes of hands-free driving. With this new free time, what do users want to be doing? One company suggested guiding the driver through a 20-minute meditation cycle.

Lastly, the end-ride experience consists of the vehicle dropping the user off at the optimal location, parking itself or readying itself for its next trip.

An underlying challenge to this whole process is maintaining user connectivity. The user needs to be connected at all times, which may mean the provider has to subsidize that connection.

The Bottom Line

Safety in ADAS will remain a priority, and UX developers must still assume drivers are actively engaged in driving the vehicle for at least the next decade. This is because many automotive companies face challenges such as integrating new technology into legacy architectures or developing a new platform for connected vehicles. These issues will not be addressed until self-driving vehicles have fully arrived. Ideally, the pre-ride, in-ride, and end-ride scenario will become a sustainable cycle, optimized for maximum utilization and adaptable to multiple use cases and scenarios. Companies need to build revenue generation into this cycle to remain profitable.

To watch the full IHS Markit talk by Zach Dusseau, download the Emotion AI Summit 2019 recordings.