Guest blog by: Goran Vuksic, iOS Developer at Tattoodo

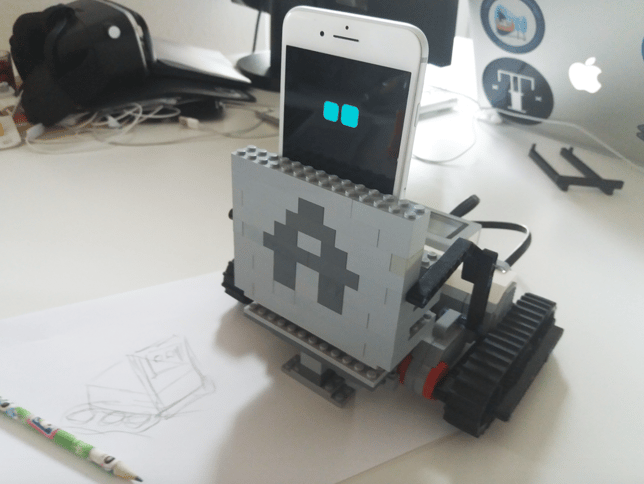

I often work on small weekend projects to entertain my 10-year-old son, and I had the idea to build a cute little robot like Anki's Cozmo. With this project I tried to combine different technologies and some gadgets I had at home: I used LEGO Mindstorms and an iPhone for the hardware, and to bring the robot to life I used Affectiva's SDK and a Speech API for interaction.

The first challenge was to build the robot out of LEGO and the LEGO Mindstorms EV3 set. I connected the Mindstorms programmable brick to motors and wheels so the robot could move. I then built a frame around it with LEGO bricks so it could hold the iPhone in front. I created a few images in Photoshop to display on the iPhone as the robot's face, and I was happy with the final product.

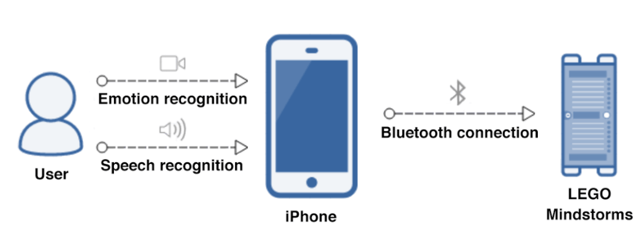

The iPhone communicates with the LEGO Mindstorms set via Bluetooth. A search on GitHub turned up several projects demonstrating how to do this. I made a few adjustments to one of those projects and added it to my Xcode project. Once I had made sure the robot moved when I sent it commands from iOS, I added a Speech API and created voice commands to trigger those movements.

The robot can now respond to voice commands such as "Come here", "Go away" and a few others I created. I also added a few audio clips, so each action not only activates the LEGO Mindstorms motors but also plays a different audio clip on the iPhone. Even though this was fun, I still wanted to make the robot more interactive, so I added Affectiva's SDK to the project to let the robot recognize the user's emotions and expressions.
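To make the voice-command flow a little more concrete, here is a minimal Swift sketch of how a recognized phrase could be mapped to a robot action. It assumes the Speech API already delivers the transcript as a plain string; the `RobotCommand` enum, the motor power values and the audio clip names are hypothetical illustrations rather than code from this project, and the Bluetooth call that actually drives the EV3 motors is left out.

```swift
import Foundation

// Hypothetical set of robot actions; the post mentions "Come here" and "Go away".
enum RobotCommand: String, CaseIterable {
    case comeHere = "come here"
    case goAway = "go away"

    // Hypothetical power for both wheel motors (-100...100); positive drives forward.
    var motorPower: Int {
        switch self {
        case .comeHere: return 50
        case .goAway: return -50
        }
    }

    // Hypothetical name of the audio clip played on the iPhone for this action.
    var audioClip: String {
        switch self {
        case .comeHere: return "happy_beep"
        case .goAway: return "sad_beep"
        }
    }
}

// Lowercases the transcript and returns the first command phrase it contains,
// or nil if the user said something the robot does not understand.
func command(forTranscript transcript: String) -> RobotCommand? {
    let normalized = transcript.lowercased()
    return RobotCommand.allCases.first { normalized.contains($0.rawValue) }
}
```

Matching on a substring of the lowercased transcript tolerates extra words such as "please come here", which suits casual speech from a child better than requiring an exact phrase.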

First, I wanted the robot to stay in a "sleep" mode until it detects someone's face in front of it. To make this visible, I swapped the images on the iPhone so the robot's eyes went from "sleeping" to "normal". With the Affectiva SDK's ability to detect emotions and expressions, I could recognize when the user is smiling, winking or angry, and I made the robot respond to these emotions: it now reacts with a happy or sad face and plays a different sound.
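The sleep/wake and emotion behavior boils down to a small decision function. The Swift sketch below assumes the face tracker reports, for each frame, either no face at all or a set of expression scores between 0 and 100. The `FaceReading` and `RobotFace` types, the threshold of 50 and the mapping of winks to the happy face are hypothetical choices for illustration; they are not Affectiva's actual API.

```swift
import Foundation

// Hypothetical per-frame snapshot of what the face tracker reports.
// Scores are assumed to range from 0 to 100 (not Affectiva's real types).
struct FaceReading {
    var smile: Double
    var wink: Double
    var anger: Double
}

// Faces shown on the iPhone screen; the asset names are hypothetical.
enum RobotFace: String {
    case sleeping, normal, happy, sad
}

// Picks the face to display. A nil reading means nobody is in front of the robot.
func face(for reading: FaceReading?, threshold: Double = 50) -> RobotFace {
    guard let reading = reading else { return .sleeping }      // no face: stay asleep
    if reading.anger >= threshold { return .sad }               // angry user: sad face
    if reading.smile >= threshold || reading.wink >= threshold {
        return .happy                                           // smile or wink: happy face
    }
    return .normal                                              // awake, neutral expression
}
```

Checking anger before smiling is one possible priority rule; it keeps the robot from looking happy when a user grimaces with a partial smile.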

This whole project was a lot of fun, and I was happy with the end result. The robot I built is interactive: it can sense the user's presence, respond to voice commands, and react to the user's emotions and expressions. Affectiva's SDK is simple and intuitive to use, and with it developers can build interesting emotion-aware applications.

Thanks for reading! Feel free to connect with me on LinkedIn or follow me on Twitter to see what project I'll be working on next.

About Goran Vuksic

Goran is an application developer with wide knowledge of various technologies and programming languages. He has 13 years of experience in application development, and for the last 6 years he has focused on mobile applications. Goran has worked on various projects for notable clients, and applications he worked on were featured on sites such as Forbes, The Next Web, MacWorld and more. In the last few years, he has attended several hackathons and other mobile developer competitions where his skills and work were recognized and awarded (Startup Weekend Copenhagen, AngelHack Warsaw, ChupaMobile developer competitions, and more). He currently works as an iOS Developer at Tattoodo.