By: Ashley McManus, Marketing Manager; featuring Goran Vuksic, iOS developer at Tattoodo

Toys accompany children’s imaginations, serving as critical props in their dreams and adventures. While many toys rely on children to set the scene, the majority do not sense and adapt to the child’s emotions...but what if they could?

As toys continue to evolve, it raises the question of where the next wave of toy technology will take us. As an emotion AI company, we can’t help but speculate on how the little ones will be kept entertained in the years to come: what if a toy could not only recognize a child’s emotional behavior, but adapt and react to these emotions -- and at the same time, enhance learning and development?

One developer is getting close. Goran Vuksic, an Affectiva SDK user, designed a project around this concept of emotion-enabled toys. He got the idea while his son was playing with the ever-popular LEGO toys, and thought of a unique way to bring the brand’s minifigures to life with a little help from our SDK. You can read more about this cool project at his full blog post here: LEGO Minifigures and Augmented Reality.

We interviewed Goran on how his project works, the process of developing it, and what he thinks is the future of emotion-enabled toys and applications.

What does your project do and how does it work?

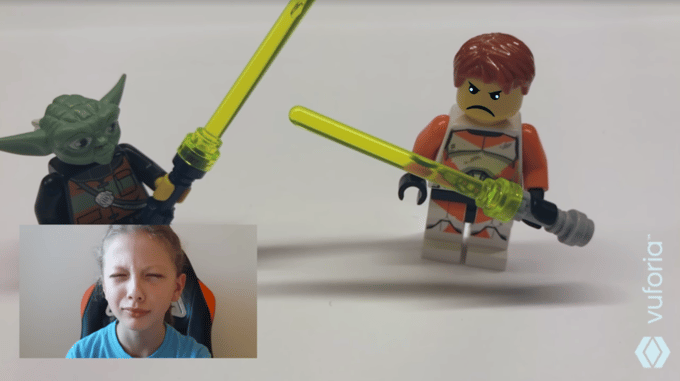

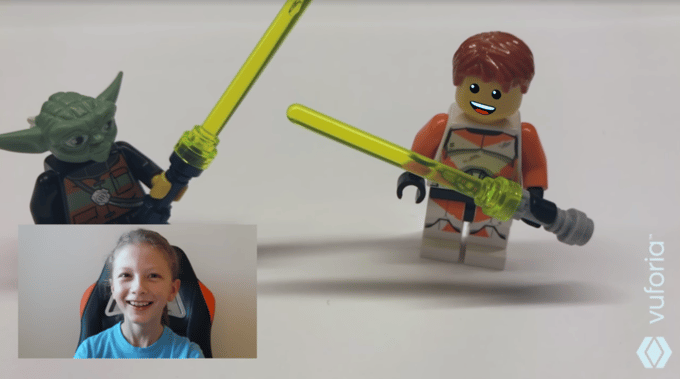

My project uses emotion recognition in combination with augmented reality. I tried to "give life" to LEGO minifigures by using the Affectiva and Vuforia SDKs. I was able to have the software recognize the user's emotions and then render matching expressions onto the LEGO faces in the augmented reality view.

Where did you get the idea to build it?

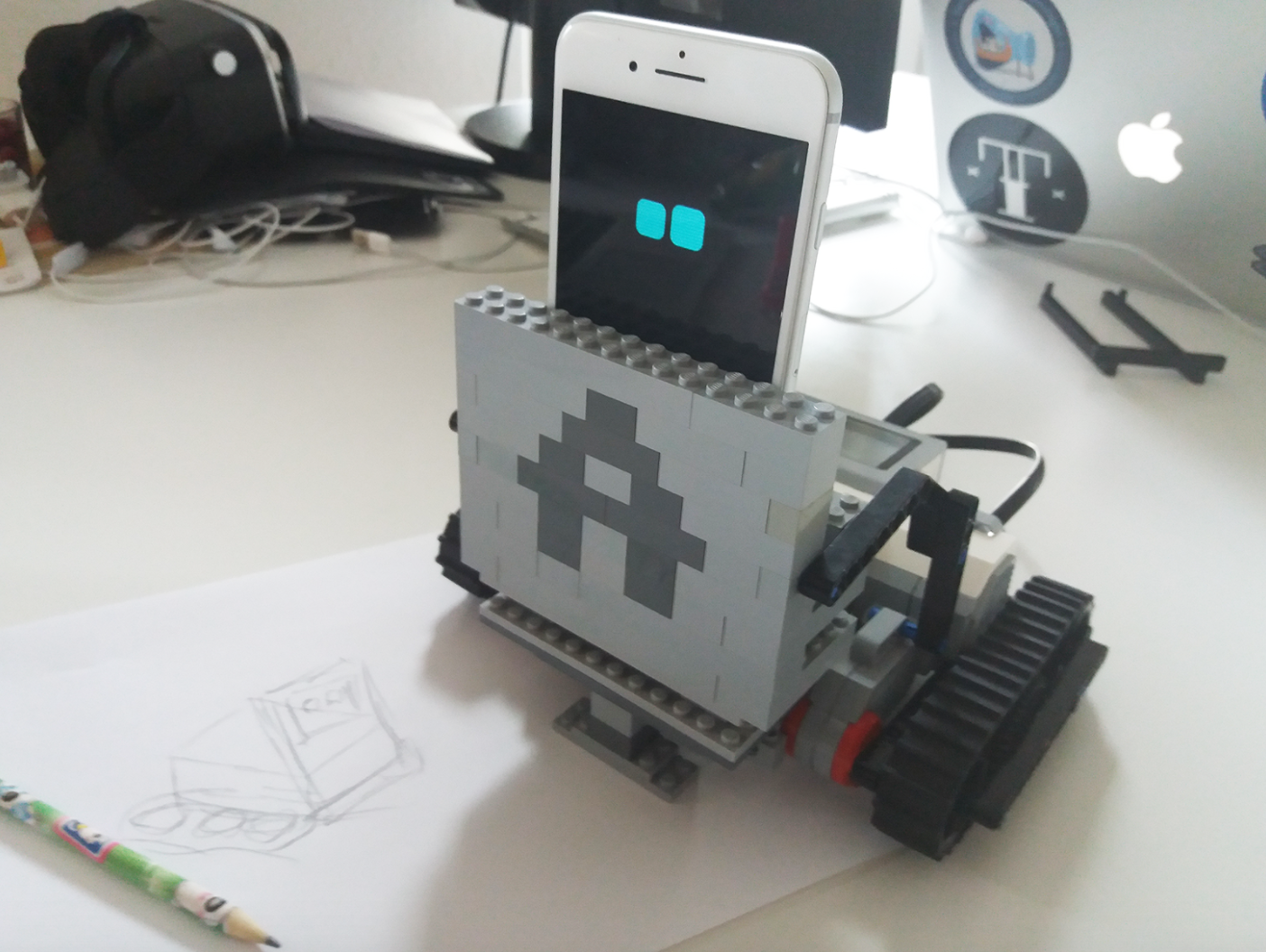

My son is 9 years old and he plays with LEGO toys a lot. I'm also a big fan of augmented reality, so whenever I have some free time I try to build out different AR projects. I got the idea of using LEGO minifigures when I noticed you can turn their head 180 degrees around so that the yellow face is blank. I thought it would be fun if I could build something that recognized user emotion with the Affectiva SDK to render different faces based on emotion recognition.

What was your process in building it?

I decided to use Unity3d since it is a really simple-to-use game engine that allows quick prototyping. First, I created recognition patterns for several LEGO minifigures and made sure the Vuforia SDK could recognize them. I then used the Affectiva SDK to recognize emotions - and based on this, I built logic so that when you make a face, the corresponding expression graphic (happy, sad, angry, etc.) renders onto the blank LEGO face.
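Editor's illustration: the mapping step Goran describes, from a detected emotion to a face graphic, can be sketched roughly as follows. This is not code from his project, which was built in Unity. It is a language-neutral sketch written in Python, and everything in it is an assumption made for illustration: the emotion names, the sprite file names, the 0-100 score range, and the threshold. It does not call the Affectiva or Vuforia SDKs.

```python
from typing import Mapping

# Hypothetical sprite names for the blank minifigure face (not from the project).
FACE_SPRITES = {
    "joy": "face_happy.png",
    "sadness": "face_sad.png",
    "anger": "face_angry.png",
}
NEUTRAL_SPRITE = "face_neutral.png"


def pick_face(scores: Mapping[str, float], threshold: float = 50.0) -> str:
    """Return the sprite for the strongest known emotion, or the neutral face.

    `scores` is assumed to map emotion names to values in the range 0-100,
    as produced by some emotion detector; the threshold is an arbitrary choice.
    """
    # Ignore any emotion we have no sprite for.
    known = {name: value for name, value in scores.items() if name in FACE_SPRITES}
    if not known:
        return NEUTRAL_SPRITE
    strongest = max(known, key=known.get)
    # Fall back to neutral when no emotion is expressed strongly enough.
    if known[strongest] < threshold:
        return NEUTRAL_SPRITE
    return FACE_SPRITES[strongest]
```

In an AR app, a function like this would be called on each detection result, and the returned sprite would be drawn over the tracked minifigure's blank face. The threshold keeps the face from flickering between expressions when every score is low.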

What role does emotion and emotion technology play in the concept of your project?

The Affectiva SDK played the main role in this project’s emotion recognition, and without it I wouldn't have been able to achieve these cool features. This project also got a lot of attention since it is a new approach to using augmented reality in combination with emotion recognition. The emotion recognition capability is fast and awesome, and when you see the LEGO minifigure mimicking your emotions you have to laugh. My whole family had a lot of fun testing this project.

Which features of your project are you most excited about?

With this project I managed to use emotion detection in combination with augmented reality in order to bring a child’s toy to life. As kids, we all play with and talk to toys, so this was kind of like making a child’s dream come true. I really hope that we see more solutions like this in the future, where kids can interact with their toys in new and intuitive ways.

What is the next step for your project? Are there any plans to build another project like this in the future?

This project can be upgraded so that it also uses voice input - and with that, I am hoping to render even more variations of minifigure facial expressions (e.g., remember how minifigures talk in The LEGO Movie?). I hope I'll have enough time in the near future to try it out! Otherwise, I have used the Affectiva SDK before in some fun projects and I find it really interesting - I'll for sure make more projects like this in the future. You can follow my blogs on LinkedIn to see what I’ll be working on.

Do you have any other advice for those looking to build similar projects of their own?

There are a lot of ways to use emotion recognition and augmented reality. Be creative and combine different technologies and SDKs!

About Goran Vuksic

Goran is an application developer with wide knowledge of various technologies and programming languages. He has 13 years of experience in application development, and for the last 6 years he has focused on mobile applications. Goran has worked on various projects for notable clients, and applications he worked on were featured on sites such as Forbes, The Next Web, MacWorld and more. In the last few years, he has attended several hackathons and other mobile developer competitions at which his skills and work were recognized and awarded (Startup Weekend Copenhagen, AngelHack Warsaw, ChupaMobile developer competitions, and more). You can read more about his LEGO AR project at his full blog.
