How can AI help next generation vehicles become more human? At the Emotion AI Summit this year, we invited thought leaders in the automotive space to speak on this topic. In the workshop track, Dr. Nils Lenke, Senior Director Innovation Management at Nuance Communications, discussed where communication between humans and their vehicles needs to improve.

During his presentation, he explained why multi-modality and non-verbal signals must be addressed in the cabin to improve communication between humans and their vehicles. He also walked through several case studies, including the Toyota Concept-i and the Mercedes-Benz MBUX, that show what this adaptive behavior can look like in practice. (Dr. Lenke also presented on one of Affectiva’s Auto AI webinars earlier this year, and that recording is available as well.)

Dr. Lenke then handed it off to his colleague Adam Emfield, who leads the Design & Research, Innovation, and In-Vehicle Experience (DRIVE) Lab at Nuance Automotive. His team studies what the experience of drivers and passengers will look like in the cars of tomorrow. His background is in human factors psychology and industrial engineering, and his work focuses on how users will interact with technology. His presentation tackled whether users actually buy in to the use cases outlined in Dr. Lenke’s presentation, drawing on experimental studies his team conducted to answer that question.

Why Car Simulation Experiments?

The DRIVE Lab focuses on understanding what users struggle with and what they might like, both in today’s cars and in the cars they want in the future. This fits well with the Nuance partnership with Affectiva, because emotions are fuzzy. They are hard for humans to pin down, and it can be difficult to know how to react to them. Deciding what to do with emotions in a car, and how to use them beyond passive sensing, therefore requires input from actual users.

What Causes Emotion Within the Car, and How Do You Want Your Car to Respond?

To start, Adam engaged the audience in some interactive Q&A around emotions within a vehicle setting. That is, if you are in a car, what might trigger happiness for you? What about surprise, or anger?

The audience had a myriad of responses for happiness: music, good conversation, or a great view from the car. When asked whether the car should do anything in response, attendees suggested it could turn up the volume if it sensed they were enjoying the music.

For surprise, examples included seeing a spider, an accident, another driver appearing unexpectedly, a pedestrian stepping out between two cars, a bicyclist, a deer, or having your coffee spill. Desired responses from the car ranged from slowing the vehicle down, to stabilizing the car so the driver can’t overreact (for example, by jerking the wheel suddenly), to verbally reassuring the driver that everything is OK.

The third example asked attendees what made them angry in the car. Common answers included traffic jams, getting cut off, running out of gas, running late, and the HMI system misinterpreting voice commands. Suggested responses from the car included playing calming music, adjusting the ambient lighting, offering a cold shower (in the fully autonomous use case), and offering to call one of your contacts.

These examples closely mirrored how Nuance approached its experiments.

The Methodology: Setting Up Driving Simulations

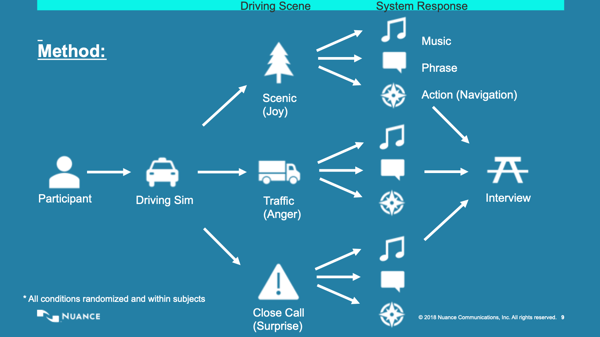

These ideas served as a foundation for what Nuance wanted to test. Adam’s group took a small subset of these questions (out of the hundreds they would have liked to answer) and brought 18 people into the lab to test their responses. They started by learning what these participants generally did in their cars, when they used voice-enabled technology, and when they had experienced strong emotions, both positive and negative. The participants were then placed in a driving simulator and taken through a few different situations.

The challenge for Nuance was that, in order to see how people respond to feeling certain emotions in the car, the team first had to evoke those emotions in participants. A further complication is that you cannot really tell whether those emotions were evoked successfully until after the study is completed.

For this study, the team selected videos they expected would elicit specific emotions. For joy, they used a scenic drive. To evoke anger, they used videos of being stuck (and staying stuck) in traffic. For surprise, the video showed the driver being cut off suddenly and almost getting into an accident. For each of these three scenarios, the lab then applied three different treatments to see how people responded: playing a song or other music, having the vehicle say a phrase, or executing an action (such as rerouting to avoid the traffic). All three were intended to help resolve the situation.

The Findings

When asked how they felt about emotion detection, 3 out of 4 drivers reported that they were content with the system monitoring their emotions and responding to them. About 6% of respondents got frustrated with it, and about 22% weren’t quite sure yet.

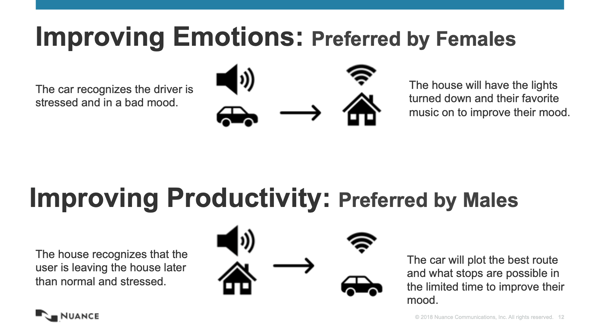

Nuance also found two groups of people in their sample. The first wanted the system to improve their emotions directly, for example by preparing (or offering) to turn on the lights at home, set the temperature at home just right, and have their favorite music playing when they arrived. The second group wanted the car to make them more productive, especially when they were angry or surprised. They wanted the car to make their lives easier by making decisions for them, such as simplifying their route or helping them avoid traffic. Interestingly, every respondent on the “improving my emotion” side was female, and every respondent on the “improve my productivity” side was male.

The Bottom Line

In the end, participants wanted their systems to learn their behavior, customize to them, and vary with the situation (for example, not doing the same thing every time they are happy). Regardless of use case and preference, they all agreed that in non-autonomous vehicles the primary goal should be improving safety above all else, which they identified as the #1 thing they expected emotion detection to help with.

To see all the data from this experiment, watch the full Emotion AI Summit workshop featuring Dr. Nils Lenke and Adam Emfield of Nuance, “How AI Helps Next Generation Vehicles Become More Human,” by downloading the recording.

.jpg?width=600&height=400&name=shutterstock_1017273892%20(1).jpg)