%20(1).jpg?width=600&height=400&name=shutterstock_468015065%20(1)%20(1).jpg)

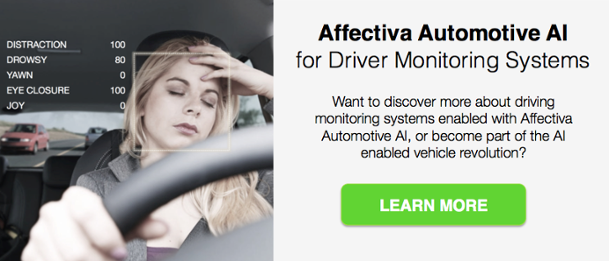

Last month, the third Sunday of November was observed as the World Day of Remembrance for Road Traffic Victims. According to the CDC, the numbers are dire: in the US alone, distracted driving causes more than 1,000 injuries and nine fatalities every day. In addition, it is estimated that up to 6,000 fatal crashes each year may be caused by drowsy drivers.

More and more car manufacturers are deploying driver monitoring systems, which are designed to detect driver impairment so that vehicles can adapt or intervene to avoid accidents. At Affectiva, we are working with leading OEMs and Tier 1s on next-generation driver monitoring. Our Human Perception AI detects complex and nuanced human emotions and cognitive states such as drowsiness and distraction. Learn more about it in this video:

Powering Affectiva Automotive AI with Deep Learning

Deep learning allows Affectiva to model more complex problems with higher accuracy than other machine learning techniques. It solves a variety of problems, such as classification, segmentation and temporal modeling. It also allows for end-to-end learning of one or more complex tasks jointly, including face detection and tracking, speaker diarization, voice-activity detection, and emotion classification from face and voice.

Building multi-modal Human Perception AI is an incredibly complex problem:

- Multi-modal. Humans manifest their cognitive and emotional states in a variety of ways, including through their tone of voice and their facial expressions.
- Many expressions. Facial muscles generate hundreds of facial expressions of emotion. Speech also has many different dimensions, from pitch and resonance to melody and voice quality.
- Highly nuanced. Expressions and cognitive states can be very nuanced and subtle, such as an eye twitch or pause patterns when speaking.
- Temporal lapse. As human mental states unfold over time, algorithms need to measure moment-by-moment changes to accurately depict emotional states.
- Non-deterministic. Changes in facial or vocal expressions can have different meanings depending on the person’s context at that time.
- Massive data. Human Perception AI algorithms need to be trained with massive amounts of real-world data that has been collected and annotated.
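
To make the "temporal lapse" point concrete, here is a minimal sketch of why a single frame is not enough. It is an illustration only, not Affectiva's method: the function names, the exponential smoothing, and the `threshold`, `min_frames` and `alpha` values are all assumptions chosen for the example. It takes hypothetical per-frame scores (for instance, an eye-closure score between 0 and 1) and flags an event only when the smoothed score stays high for several consecutive frames.

```python
def smooth_scores(frame_scores, alpha=0.3):
    """Exponentially smooth per-frame scores so one noisy frame does not dominate."""
    smoothed = []
    state = None
    for score in frame_scores:
        # The first frame initializes the state; later frames blend new evidence with history.
        state = score if state is None else alpha * score + (1 - alpha) * state
        smoothed.append(state)
    return smoothed


def sustained_event(frame_scores, threshold=0.6, min_frames=3, alpha=0.3):
    """Return True if the smoothed score exceeds `threshold` for `min_frames` consecutive frames."""
    run = 0
    for value in smooth_scores(frame_scores, alpha):
        run = run + 1 if value > threshold else 0
        if run >= min_frames:
            return True
    return False


# Hypothetical scores: a single blink versus eyes staying closed.
blink = [0.1, 0.1, 0.9, 0.1, 0.1]
eyes_closing = [0.1] + [0.9] * 7
print(sustained_event(blink), sustained_event(eyes_closing))
```

With these made-up inputs, the isolated spike is smoothed away while the sustained run is flagged. A learned temporal model replaces this kind of hand-tuned rule, but the underlying need to reason over a window of frames is the same.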

In addition to modeling these complexities, these Human Perception AI models need to run accurately, on-device, in real time. Heuristic, rule-based systems in which humans hand-code every possible pattern and scenario are not feasible: machine learning is a must.

How Does it Work?

Deep learning is an exciting area of research within machine learning that allows AI companies like Affectiva to model more complex problems with higher accuracy than other machine learning techniques. In addition, deep learning solves a variety of problems (classification, segmentation, temporal modeling) and allows for end-to-end learning of one or more complex tasks jointly. The specific tasks addressed include face detection and tracking, speaker diarization, voice-activity detection, and cognitive state classification from face and voice.

To solve these diverse tasks, Affectiva requires a suite of deep learning architectures:

- Convolutional Neural Networks (CNN)
- Multi-task (multi-attribute) networks for both regression and classification
- Region proposal networks
- Recurrent Neural Networks (RNN)
- Long Short-Term Memory (LSTM)
- Deep Recurrent Non-Negative Matrix Factorization (DR-NMF)
- CNN + RNN nets
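
As a rough illustration of how two of the ideas in this list fit together, the sketch below runs a simple recurrent cell over a sequence of per-frame feature vectors and then feeds the final hidden state to two heads: one for classification and one for regression. It is a toy under stated assumptions, not Affectiva's architecture: the per-frame features stand in for the output of a CNN, a plain tanh recurrent cell stands in for an LSTM, and every name and weight (`run_sequence`, `TOY_PARAMS`, and so on) is hypothetical.

```python
import math


def dense(vec, weights, bias):
    """Fully connected layer: one output per weight row."""
    return [sum(w * x for w, x in zip(row, vec)) + b for row, b in zip(weights, bias)]


def softmax(logits):
    """Convert logits to probabilities, subtracting the max for numerical stability."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def recurrent_step(features, hidden, w_in, w_rec, bias):
    """Simple recurrent cell: new hidden = tanh(W_in x + W_rec h + b)."""
    from_input = dense(features, w_in, bias)
    from_state = dense(hidden, w_rec, [0.0] * len(hidden))
    return [math.tanh(a + c) for a, c in zip(from_input, from_state)]


def run_sequence(frames, params):
    """Run the recurrent cell over all frames, then apply both task heads."""
    hidden = [0.0] * len(params["w_rec"])
    for features in frames:  # each item stands in for a CNN's per-frame output
        hidden = recurrent_step(features, hidden, params["w_in"], params["w_rec"], params["b_h"])
    class_probs = softmax(dense(hidden, params["w_cls"], params["b_cls"]))  # classification head
    intensity = dense(hidden, params["w_reg"], params["b_reg"])[0]          # regression head
    return class_probs, intensity


# Hand-picked toy weights: 3 input features, 2 hidden units, 2 classes, 1 regression output.
TOY_PARAMS = {
    "w_in": [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]],
    "w_rec": [[0.1, 0.0], [0.0, 0.1]],
    "b_h": [0.0, 0.0],
    "w_cls": [[1.0, -1.0], [-1.0, 1.0]],
    "b_cls": [0.0, 0.0],
    "w_reg": [[0.5, 0.5]],
    "b_reg": [0.0],
}

probs, intensity = run_sequence([[0.2, 0.1, 0.0], [0.4, 0.3, 0.1], [0.9, 0.7, 0.2]], TOY_PARAMS)
print(probs, intensity)
```

Sharing one temporal representation across both heads is the point of the multi-task design: the classification and regression outputs are computed from the same hidden state rather than from two separate networks.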

We don’t just use off-the-shelf network architectures; we focus our efforts on building custom layers and architectures designed for facial and vocal analysis tasks. Learn more about our technology in this technical deep-dive webcast with our Director of Applied AI.

The Bottom Line

Affectiva Automotive AI is the first in-cabin sensing AI that leverages deep learning to identify, in real time from face and voice, complex and nuanced emotional and cognitive states of a driver in a vehicle. It can help OEMs and Tier 1 suppliers build advanced driver monitoring systems through comprehensive human perception AI—and drive down fatality numbers as a result.

Want to discover more about driver monitoring systems enabled by Affectiva Automotive AI, or become part of the AI-enabled vehicle revolution?