In this series, we offer a peek behind the curtain at all things science at Affectiva, from a technical perspective. In this blog post, we will talk about who makes up the science team, why what we do is difficult, and why it's important. Ultimately, we want to bring emotional intelligence to technology, and we do that through our patented software that measures emotions and cognitive states from face and voice.

WHAT DOES AFFECTIVA DO?

Affectiva detects the face and uses deep learning to analyze the pixels of the face, independently estimating 20 different facial expressions that can combine into hundreds of expressions and seven emotional states. We can also infer many other targets from someone's face, such as age, gender and ethnicity, using ANY camera.

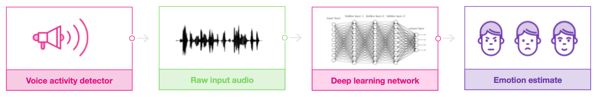

From the voice perspective, we take in a raw audio signal of the environment and feed it through a voice activity detector. Our deep learning networks then analyze this raw audio input to produce a series of estimates, such as arousal and gender. The deep networks in our software are trained to parse, analyze and recognize the raw audio signal directly, instead of first extracting summary features from it.
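To make the first stage of that pipeline concrete, here is a minimal sketch of a voice activity detector. It is not Affectiva's detector: it assumes the simplest common technique, a fixed energy threshold on short frames, and the frame length (160 samples, i.e. 10 ms at 16 kHz) and threshold (0.02) are illustrative assumptions. Only the frames it keeps would be handed on to a downstream model, which is not shown.

```python
import math
from typing import List, Sequence


def frame_energies(samples: Sequence[float], frame_len: int) -> List[float]:
    """Split the signal into non-overlapping frames and return each frame's RMS energy."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_len))
    return energies


def detect_voice(samples: Sequence[float], frame_len: int = 160,
                 threshold: float = 0.02) -> List[bool]:
    """Flag each frame as voiced (True) if its RMS energy exceeds a fixed threshold.

    The threshold is an assumed constant; practical detectors usually adapt it
    to the background noise level.
    """
    return [e > threshold for e in frame_energies(samples, frame_len)]


def voiced_frames(samples: Sequence[float], frame_len: int = 160,
                  threshold: float = 0.02) -> List[Sequence[float]]:
    """Return the raw audio of the voiced frames, the part a downstream model would see."""
    flags = detect_voice(samples, frame_len, threshold)
    return [samples[i * frame_len:(i + 1) * frame_len]
            for i, voiced in enumerate(flags) if voiced]


# Usage: 0.1 s of silence followed by 0.1 s of a 200 Hz tone, sampled at 16 kHz.
RATE = 16000
silence = [0.0] * 1600
tone = [0.5 * math.sin(2 * math.pi * 200 * t / RATE) for t in range(1600)]
flags = detect_voice(silence + tone)
segments = voiced_frames(silence + tone)
```

With these settings the ten silent frames are flagged as unvoiced and the ten tone frames as voiced, so only the second half of the signal would be passed on for emotion estimation.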

EMOTION AI IS A COMPLEX PROBLEM

When dealing with our technology and machine learning, a question we often run into is: "Isn't it just about the data? Isn't it the same algorithms developed at these academic conferences, and you just point them at labeled data?"

The answer is yes, to a certain degree. But when it comes to emotions, it is more complicated, for the following reasons:

MULTI-MODAL

We express emotions not just through our faces, but also through voice and gestures, so we need to look at all of these aspects combined. Our current SDK looks at the face, and we're starting to expand into speech to help complement it.

MANY EXPRESSIONS

In order to analyze a face, many different expressions come into play. It is not only the 17 individual facial muscle movements, but also the hundreds of different ways you can combine them. Every emoji, at every intensity and with all the variations in between, can be derived from people's faces.

HIGHLY NUANCED

Emotions and expressions are also highly nuanced: the difference between person A and person B is much larger than the difference between a person's neutral face and the same person doing a subtle smile. Those subtleties are what the Affectiva SDK aims to pick up, while ignoring the differences between a baby and an old man, a young girl and an old man, or an African American and someone with a different ethnic background. It is important to ignore all of these big visible changes across faces and really pick up on the subtle ones.

TEMPORAL LAPSE

Temporal lapse also speaks to complexity. Just as with multimodality, where you have to pay attention to several different channels, it is important to look at each contributing element of body language and facial expression: not only what each one does, but also their sequencing and timing. For example, compare a person who yells and then starts smiling with a person who smiles and then yells. Both sequences involve yelling and both involve smiling, yet the order in which they happen can be really important, because it changes the meaning.
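The yelling and smiling example can be sketched in a few lines. The event labels and timestamps below are hypothetical, and this is not how Affectiva models time; it only shows why an order-blind summary (counting which events occurred) cannot tell the two sequences apart, while an order-aware summary (counting which event followed which) can. A learned sequence model would pick up such patterns from data rather than from hand-written features like these.

```python
from collections import Counter
from typing import List, Tuple

# Hypothetical representation: (timestamp in seconds, event label).
Event = Tuple[float, str]


def bag_of_events(events: List[Event]) -> Counter:
    """Order-blind summary: how many times each label occurred."""
    return Counter(label for _, label in events)


def transitions(events: List[Event]) -> Counter:
    """Order-aware summary: counts of (earlier label, next label) pairs, sorted by time."""
    labels = [label for _, label in sorted(events)]
    return Counter(zip(labels, labels[1:]))


yell_then_smile = [(0.0, "yell"), (1.5, "smile")]
smile_then_yell = [(0.0, "smile"), (1.5, "yell")]

# Same events, so the order-blind summaries are identical...
same_bag = bag_of_events(yell_then_smile) == bag_of_events(smile_then_yell)
# ...but the order-aware summaries differ.
same_transitions = transitions(yell_then_smile) == transitions(smile_then_yell)
```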

NON-DETERMINISTIC

Context matters. Research shows that the same facial expression is interpreted differently depending on whether the person's hand is holding up a banana peel or a fist. Knowing people on a personal level could also factor into how we interpret the way they express emotion. For example, if we know a person tends to smile when they're extremely frustrated, like Meryl Streep in The Devil Wears Prada, we would be able to properly assess that person's emotional state by analyzing their face and voice AND taking into account the context in which they are being observed. Are they listening to music? Are they having a conversation? Everything could be taken into account, including the individual person.

BEYOND EMOTIONS

Extending beyond emotions means that the same technology, the same background and the same modeling could be used for much more than detecting joy. They could be used to detect whether someone is distracted, confused or frustrated. The spectrum of things we could target, analyze and measure is almost as wide as the human vocabulary for describing a person's internal mental, cognitive and emotional state.

MASSIVE DATA

We have the World's Largest Emotion Data Repository: more than 50,000 hours of audio-video from 87 different countries (6.5M faces analyzed, 2B frames, and more than 1,100 hours of automotive data). It is also always growing: each week we receive more than 200 hours of video data from our in-market partners and more than 100 hours of in-car automotive video data. Emotion AI algorithms need to be trained on these massive amounts of real-world data, which are collected and annotated every day.

CONTEXT

Context means taking into account what else the person is doing and their environment. For example, seeing someone on a computer doesn't define the context for us. Are they playing a game or video-chatting? Checking work emails or listening to music? Reaching a level of understanding of what it is people are doing and where they are looking becomes really important. In order to create a full picture for Emotion AI, we need to understand all of these complexities.

AFFECTIVA'S APPROACH TO ADDRESSING EMOTION AI COMPLEXITIES

We address these Emotion AI complexities much as one would address any hard machine learning problem. We need a lot of data to train on, and we need really complex algorithms that factor in multiple signals, that factor in time, and that are able to model these complex, nuanced states. We need the infrastructure to train them, such as labeling tools, modeling software infrastructure and skillful use of open-source frameworks. And lastly, we need a team skilled in machine learning that understands emotion and its complex nuances, so it can put the first three together to produce models that work. Part of our science team's success is that everyone on it understands the nuances of human emotion and can give feedback to the labeling team after analyzing the data. In order to solve the complexities of Emotion AI, we need a team that can intelligently inform new kinds of algorithms that have yet to be discovered.

Stay tuned and don’t miss the next post of this Science Deep Dive Series, in which we will share with you how we address these complexities by using our robust and scalable data strategy. In the meantime, you can watch the full tech deep dive webcast by our Director of Applied AI here.