The National Highway Traffic Safety Administration says that 94% of serious crashes are the result of human error. With recent developments in driver assistance technology and driver monitoring systems, the industry aims to apply these technical capabilities to bring that percentage down. The New York Times commented on the anticipated “Computer Chauffeur,” suggesting that the secret sauce of these systems is robust AI that is gradually being integrated into the cars of the future to save lives. But how?

Driver Monitoring Systems Must Detect Speech and Facial Expressions

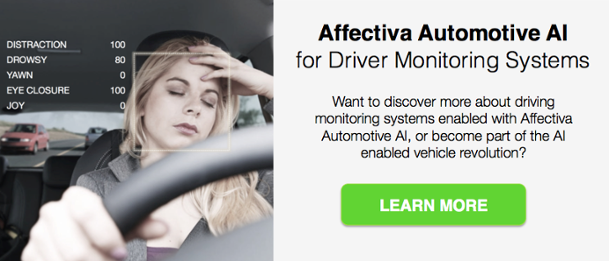

It’s not enough to simply deploy eye-gaze tracking or head-position tracking. These systems do not present a holistic view of what is happening with the human driver. Incorporating AI and deep learning takes driver state monitoring to the next level by analyzing both face and voice for signs of driver impairment caused by physical distraction, mental distraction (from cognitive load or anger), drowsiness and more. With this information, the car’s infotainment system or advanced driver assistance system (ADAS) can be designed to take appropriate action.

For example, these systems can monitor levels of driver fatigue and distraction to trigger alerts and interventions that correct dangerous driving. If a driver begins texting or starts nodding off at the wheel, an audio or display alert can instruct the driver to remain engaged, and the seat belt can vibrate to jolt the driver back to attention.
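The escalation from alert to haptic cue can be sketched as a simple policy. This is a minimal illustration, not Affectiva’s implementation: the `DriverState` scores, the 0.5 and 0.8 thresholds, and the `Intervention` levels are hypothetical, and a production system would typically smooth scores over time before acting on them.

```python
from dataclasses import dataclass
from enum import Enum


class Intervention(Enum):
    NONE = 0
    ALERT = 1             # audio or display alert asking the driver to stay engaged
    SEATBELT_VIBRATE = 2  # stronger haptic cue to jolt the driver to attention


@dataclass
class DriverState:
    # Hypothetical impairment scores in [0, 1], e.g. averaged over recent frames
    drowsiness: float
    distraction: float


# Illustrative thresholds only; real values would come from validation data
ALERT_THRESHOLD = 0.5
HAPTIC_THRESHOLD = 0.8


def choose_intervention(state: DriverState) -> Intervention:
    """Map the worse of the two impairment scores to an escalating intervention."""
    risk = max(state.drowsiness, state.distraction)
    if risk >= HAPTIC_THRESHOLD:
        return Intervention.SEATBELT_VIBRATE
    if risk >= ALERT_THRESHOLD:
        return Intervention.ALERT
    return Intervention.NONE


print(choose_intervention(DriverState(drowsiness=0.2, distraction=0.6)))
# → Intervention.ALERT
```

Taking the maximum of the two scores means either fatigue or distraction alone is enough to escalate, which matches the texting and nodding-off examples above.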

In semi-autonomous vehicles, awareness of driver state also builds trust between people and machines, enabling an eyes-off-road experience and helping solve the “handoff” challenge.

Enter Affectiva Automotive AI

Using in-cabin cameras and microphones, Affectiva Automotive AI analyzes faces and voices to identify the expressions, emotions and reactions of the people in a vehicle. (Note that we do not send any facial or vocal data to the cloud; it is all processed locally.) Our algorithms are built using deep learning, computer vision, speech science, and massive amounts of real-world data collected from people driving or riding in cars.

Affectiva Automotive AI includes a subset of facial metrics from our Emotion SDK that are relevant for automotive use cases. These metrics are designed to work in the in-cabin environment, supporting different camera positions and head angles. We have also added vocal metrics.

Deep neural networks analyze the face at the pixel level to classify facial expressions and emotions. Deep networks also analyze acoustic-prosodic features (such as tone, tempo, loudness and pause patterns) to identify speech actions.
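To make “acoustic-prosodic features” more concrete, the sketch below computes three hand-crafted proxies from a mono waveform: loudness (frame RMS in dBFS), a pause ratio (share of frames below an energy threshold), and a crude autocorrelation pitch estimate as a stand-in for tone. It is only an illustration under stated assumptions (samples in [-1, 1], 40 ms frames, a -40 dBFS silence threshold, input at least one frame long); it is not Affectiva’s feature extractor.

```python
import numpy as np


def frame_signal(x, frame_len, hop):
    """Split a 1-D signal into overlapping frames (drops the ragged tail)."""
    n_frames = 1 + (len(x) - frame_len) // hop
    return np.stack([x[i * hop : i * hop + frame_len] for i in range(n_frames)])


def estimate_pitch(frame, sr, fmin=75.0, fmax=400.0):
    """Crude pitch estimate in Hz: the autocorrelation peak within a voice-like lag range."""
    frame = frame - frame.mean()
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1 :]  # lags 0..N-1
    lag_min, lag_max = int(sr / fmax), int(sr / fmin)
    lag = lag_min + int(np.argmax(ac[lag_min : lag_max + 1]))
    return sr / lag


def prosodic_features(x, sr, frame_ms=40, hop_ms=10, silence_db=-40.0):
    """Loudness, pause ratio and mean pitch for a waveform x in [-1, 1] (assumed)."""
    frame_len = int(sr * frame_ms / 1000)
    hop = int(sr * hop_ms / 1000)
    frames = frame_signal(x, frame_len, hop)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    level_db = 20 * np.log10(rms + 1e-12)  # per-frame loudness in dBFS
    voiced = level_db > silence_db          # frames louder than the silence threshold
    pitches = [estimate_pitch(f, sr) for f in frames[voiced]]
    return {
        "mean_loudness_db": float(level_db[voiced].mean()) if voiced.any() else float("-inf"),
        "pause_ratio": float(1.0 - voiced.mean()),
        "mean_pitch_hz": float(np.mean(pitches)) if pitches else 0.0,
    }


# Usage: 1 s of a 200 Hz tone followed by 1 s of silence
sr = 16_000
t = np.arange(sr) / sr
clip = np.concatenate([0.5 * np.sin(2 * np.pi * 200 * t), np.zeros(sr)])
print(prosodic_features(clip, sr))
```

Tempo could be approximated in the same spirit, for example by counting voiced segments per second, but a learned network can pick up such cues directly from the audio.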

Facial Expressions

- A deep learning face detector and tracker locates faces in raw frames captured by optical (RGB or near-infrared) sensors

- Our deep neural networks analyze the face at a pixel level to classify facial expressions and emotions
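The two face-analysis steps can be sketched as a per-frame loop: detect face boxes, crop each face from the frame, and turn the classifier’s raw scores into probabilities with a softmax. The `detect_faces` and `classify_face` callables and the `LABELS` list are hypothetical placeholders for a trained detector and network; only the data flow is meant to be illustrative.

```python
import numpy as np

# Hypothetical label set, for illustration only
LABELS = ["neutral", "joy", "anger", "surprise"]


def softmax(logits):
    """Convert raw network scores into probabilities that sum to 1."""
    z = np.asarray(logits, dtype=float)
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()


def analyze_frame(frame, detect_faces, classify_face):
    """Detect faces in one RGB or near-IR frame and classify each face crop.

    detect_faces(frame) -> list of (x, y, w, h) boxes   (hypothetical detector)
    classify_face(crop) -> one raw score per label      (hypothetical network)
    """
    results = []
    for x, y, w, h in detect_faces(frame):
        crop = frame[y : y + h, x : x + w]  # pixel-level input for the classifier
        probs = softmax(classify_face(crop))
        results.append({"box": (x, y, w, h), "scores": dict(zip(LABELS, probs.tolist()))})
    return results


# Usage with stand-in models: one 64x64 face in a 120x160 near-IR frame
frame = np.zeros((120, 160), dtype=np.float32)
faces = analyze_frame(
    frame,
    detect_faces=lambda f: [(40, 20, 64, 64)],
    classify_face=lambda crop: [0.0, 2.0, 0.0, 0.0],
)
print(faces[0]["box"], max(faces[0]["scores"], key=faces[0]["scores"].get))
# → (40, 20, 64, 64) joy
```

Keeping the detector and classifier as injected callables separates the tracking stage from the classification stage, so either can be swapped without touching the loop.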

Speech

- Our voice activity detector identifies when a person starts speaking

- Our AI starts analyzing after the first 1.2 seconds of speech, sending raw audio to the deep learning network

- The network makes a prediction on the likelihood of an emotion or speech event being present
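The speech steps can be sketched as a small streaming gate: a voice activity detector watches incoming frames, and once speech starts, raw audio is buffered until 1.2 seconds have accumulated and is then passed to a model. The energy-threshold VAD is a deliberately simple stand-in for a real voice activity detector, `model` is a hypothetical callable, and the 16 kHz rate, 20 ms frames and -40 dBFS threshold are assumptions. For simplicity, the gate counts 1.2 s of audio from speech onset rather than 1.2 s of voiced speech.

```python
import numpy as np

SAMPLE_RATE = 16_000                  # assumed input rate
MIN_SPEECH_S = 1.2                    # analysis starts after 1.2 s of speech
MIN_SPEECH_SAMPLES = int(round(MIN_SPEECH_S * SAMPLE_RATE))
FRAME = 320                           # 20 ms frames at 16 kHz
ENERGY_THRESHOLD_DB = -40.0           # illustrative VAD threshold


def is_speech(frame):
    """Very simple energy-based voice activity detection (stand-in for a real VAD)."""
    rms = np.sqrt(np.mean(frame ** 2))
    return 20 * np.log10(rms + 1e-12) > ENERGY_THRESHOLD_DB


class SpeechGate:
    """Buffers raw audio from speech onset and hands 1.2 s of it to a model."""

    def __init__(self, model):
        self.model = model    # hypothetical callable: raw audio -> predictions
        self.buffer = []
        self.in_speech = False

    def push(self, frame):
        """Feed one frame; returns the model's output once enough audio is buffered."""
        if not self.in_speech:
            if not is_speech(frame):
                return None
            self.in_speech = True  # speech onset detected
        self.buffer.append(frame)
        if len(self.buffer) * FRAME >= MIN_SPEECH_SAMPLES:
            audio = np.concatenate(self.buffer)
            self.buffer, self.in_speech = [], False
            return self.model(audio)
        return None


# Usage: 10 silent frames, then 60 frames (1.2 s) of a 220 Hz "voice" tone
gate = SpeechGate(model=lambda audio: {"samples_analyzed": len(audio)})
t = np.arange(FRAME) / SAMPLE_RATE
voice = 0.3 * np.sin(2 * np.pi * 220 * t)
silence = np.zeros(FRAME)
outputs = [gate.push(f) for f in [silence] * 10 + [voice] * 60]
print([o for o in outputs if o is not None])
# → [{'samples_analyzed': 19200}]
```

Gating on a minimum amount of speech avoids running the network on very short utterances, where a prediction about emotion or a speech event would have little evidence to work with.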

The Bottom Line

Affectiva Automotive AI is the first in-cabin sensing AI that identifies, in real time from face and voice, complex and nuanced emotional and cognitive states of a vehicle’s occupants, specifically the driver. It can help OEMs and Tier 1 suppliers build advanced driver monitoring systems through comprehensive human perception AI, and it can help save lives.

Want to discover more about driver monitoring systems enabled by Affectiva Automotive AI, or become part of the AI-enabled vehicle revolution?